Introduction

The current OpenAPI parsing and handling tools are not geared towards pentesting an API. We created Swagger-EZ to make getting up and running with API pentesting faster and less painful. The GitHub repository is here.

When auditing an API, it is fairly common that we are supplied with some kind of OpenAPI specification and the endpoint that the specification is intended for. This is great because it gives you a definition to work from while testing the API, rather than having to formulate each request from documentation. The problem with using OpenAPI for pentesting an API is that it can be very time-consuming to go from a raw definition file to the point at which you have all the requests in a proxy like Burp Suite and are actually testing the API.

If you’re not familiar with OpenAPI:

The OpenAPI Specification, originally known as the Swagger Specification, is a specification for machine-readable interface files for describing, producing, consuming, and visualizing RESTful web services.[1] Originally part of the Swagger framework, it became a separate project in 2016, overseen by the Open API Initiative, an open source collaborative project of the Linux Foundation.[2] Swagger and some other tools can generate code, documentation and test cases given an interface file.

It is typically either a JSON or YAML file which describes all the endpoints pertaining to a particular API and how to use all those endpoints. This includes all the parameters and formatting of the different requests needed to make valid requests to the API. Often they can also include examples of the different requests the API accepts.
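As a rough illustration of what such a file contains, here is a minimal sketch that loads a small Swagger 2.0 JSON definition and lists each endpoint with its parameters. The API described in `SPEC_JSON` (host, paths, and parameter names) is made up for this example, and `list_operations` is a hypothetical helper, not part of any Swagger tooling.

```python
import json

# Hypothetical Swagger 2.0 definition, trimmed to the fields used below.
SPEC_JSON = """
{
  "swagger": "2.0",
  "info": {"title": "Example API", "version": "1.0"},
  "host": "api.example.com",
  "basePath": "/v1",
  "paths": {
    "/users/{userId}": {
      "get": {
        "parameters": [
          {"name": "userId", "in": "path", "required": true, "type": "integer"}
        ],
        "responses": {"200": {"description": "OK"}}
      }
    },
    "/users": {
      "post": {
        "parameters": [
          {"name": "name", "in": "formData", "required": true, "type": "string"}
        ],
        "responses": {"201": {"description": "Created"}}
      }
    }
  }
}
"""

# Keys of a path item that describe an operation in Swagger 2.0.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch"}


def list_operations(spec):
    """Return (METHOD, path, [parameter names]) for every operation in the spec."""
    operations = []
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            if method not in HTTP_METHODS:
                continue  # skip non-operation keys such as path-level "parameters"
            names = [p.get("name") for p in operation.get("parameters", [])]
            operations.append((method.upper(), path, names))
    return operations


spec = json.loads(SPEC_JSON)
for method, path, names in list_operations(spec):
    print(method, path, names)
```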

Problems We Found Using Other Tools

Most of the OpenAPI parsing tools out there still require you to work through each individual request, filling in parameters or other information, before you can send each request through your proxy tool and work with the requests from there. This is tedious and time-consuming, and it eats away at the time you have scoped for testing and scanning.

We wanted something that you could drop an OpenAPI file into and, within a couple of minutes, have the proper requests populated in a proxy tool. Swagger-EZ aims to accomplish this. It does not require you to work with each individual request, but instead allows you to edit and send requests in bulk to populate your proxy much faster and with less pain. Once in Burp Suite, the requests can be modified, tested, and scanned from there.

So Many Different Formats

One initial issue with these definition files is that they can come in different formats, e.g. Swagger 1.0, Swagger 2.0 JSON or YAML, API Blueprint, and more. Not every format will work with every tool designed for parsing and handling these files. We have found that an easy solution is to convert the file to a common format that works with most tools. https://openapi.tools/ contains a list of useful tools for converting OpenAPI files. If the definition file is not sensitive, we typically use https://apimatic.io/transformer to do this, as it accepts a lot of different formats.


Swagger 2.0 JSON is one of the more widely used specifications and works with most tools out there. Converting to Swagger 2.0 JSON makes it a good bet that you will be able to use the file with any tools you might need while testing.
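Before converting, it can help to check which format you were actually handed. The sketch below guesses the format of an already-parsed JSON or YAML definition from its top-level version field: Swagger 2.0 files carry a `swagger` field, OpenAPI 3.x files carry `openapi`, and legacy Swagger 1.x files carry `swaggerVersion`. The `detect_format` helper is a hypothetical name used for illustration only, and it does not recognize non-Swagger formats such as API Blueprint.

```python
def detect_format(spec):
    """Guess the definition format from its top-level version field."""
    if "openapi" in spec:
        return "OpenAPI " + str(spec["openapi"])
    if "swagger" in spec:
        return "Swagger " + str(spec["swagger"])
    if "swaggerVersion" in spec:
        return "Swagger " + str(spec["swaggerVersion"]) + " (legacy)"
    return "unknown"


# Each dict stands in for a parsed definition file.
for candidate in ({"swagger": "2.0"}, {"openapi": "3.0.1"}, {"swaggerVersion": "1.2"}, {}):
    print(detect_format(candidate))
```

Anything that does not report Swagger 2.0 is a candidate for conversion before you load it into other tools.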

What To Do With It Now

Some of the tools we find most useful when working with Swagger files are Postman, Swagger-UI, and Swagger-editor. There is even a Burp Suite extension that will parse a Swagger JSON file.

- Postman is a great tool that provides a lot of functionality for working with these files. You can send properly formatted requests, modify requests, and convert to different formats.

- Swagger-UI is meant to simply give you an interface to the API, and it does a great job of this. Each request has its own section in which parameters can be modified, and the request can be sent directly from the interface.

- Swagger-editor is great for debugging a bad definition file to get it into a working state.

- The Burp Suite extension is a nice addition, but it does not format any of the actual requests for you or allow you to fill out any of the parameters. It basically just loads the endpoints and request methods into Burp Suite for you.

These tools are all extremely helpful when testing an API based on a definition file. But, as previously mentioned, they all take quite a bit of time, because you have to walk through, configure, and send each individual request.

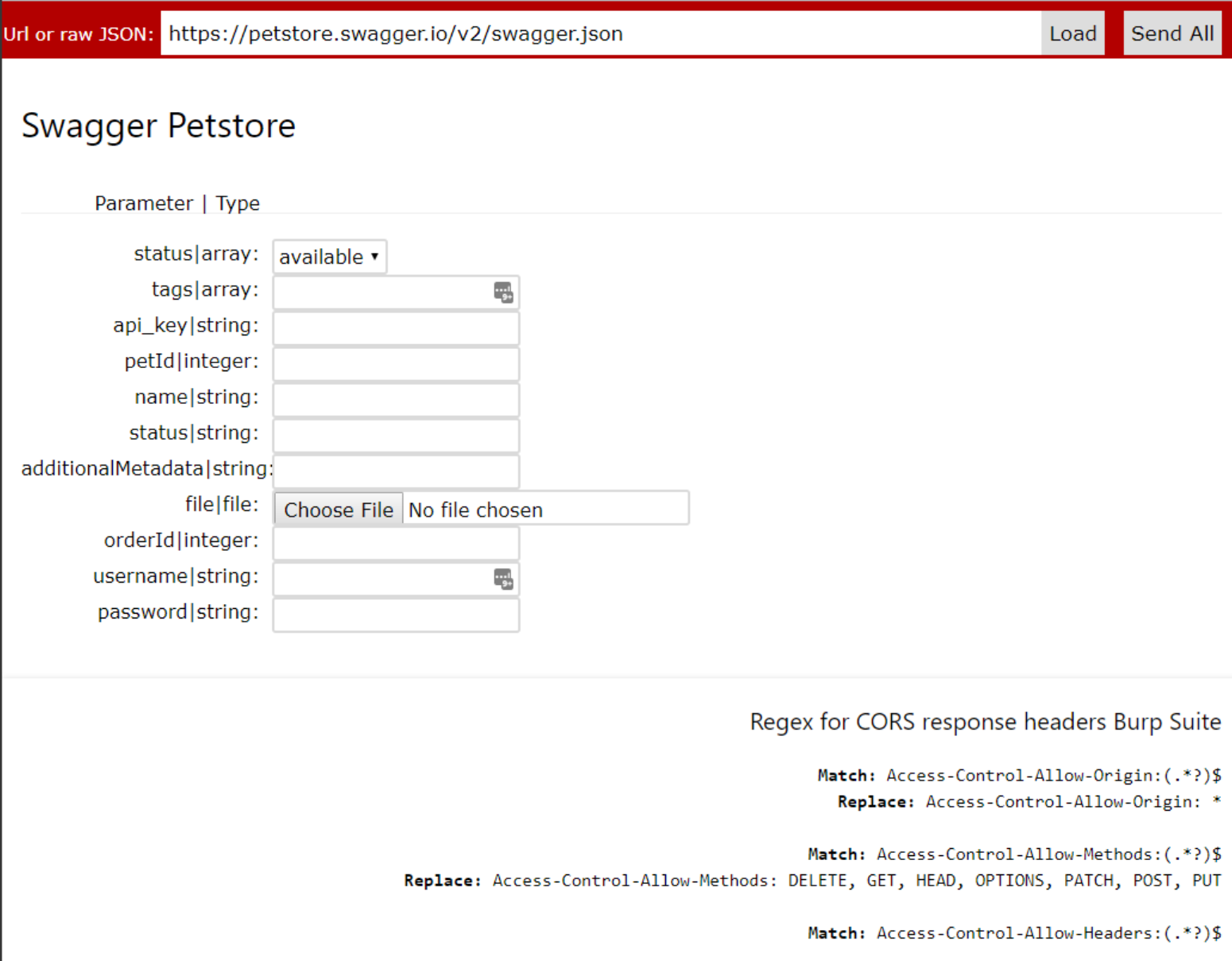

Instead of working through each request individually, Swagger-EZ will take in an OpenAPI specification URL or JSON blob and parse out only the unique parameters, allowing you to fill in each one with some valid test data. You can then send off all the requests to the API by clicking one button. With your browser pointed at an intercepting proxy, this gives you a populated site tree. Swagger-EZ is built on the Swagger-js project and runs entirely in the browser, so using it just requires opening it in your own browser. We have also hosted a version here for you to use or try out.

https://rhinosecuritylabs.github.io/Swagger-EZ/

The interface will only show one instance of each parameter and its type for all the parameters in the specification file. This allows you to fill in each parameter once instead of having to do this for potentially tens or hundreds of the same parameters. Once you have filled in your test data, you can just hit “Send All” and each request is sent the way it is specified in the Swagger file.
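The idea of showing each parameter only once can be approximated in a few lines. This is a minimal sketch, not Swagger-EZ's actual implementation: it walks a parsed Swagger 2.0 definition and counts how many operations use each (name, type) pair. The sample `spec` and the `unique_parameters` helper are hypothetical, and parameters without a `type` (such as body parameters that use a schema) are labeled `"schema"` here as a simplifying assumption.

```python
# Keys of a path item that describe an operation in Swagger 2.0.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch"}


def unique_parameters(spec):
    """Map each (name, type) pair to the number of operations that use it."""
    seen = {}
    for item in spec.get("paths", {}).values():
        shared = item.get("parameters", [])  # parameters declared for the whole path
        for method, operation in item.items():
            if method not in HTTP_METHODS:
                continue
            for param in shared + operation.get("parameters", []):
                name = param.get("name")
                if name is None:
                    continue  # e.g. a "$ref" entry, which this sketch does not resolve
                key = (name, param.get("type", "schema"))
                seen[key] = seen.get(key, 0) + 1
    return seen


# Hypothetical definition in which "userId" appears in three operations.
spec = {
    "paths": {
        "/users/{userId}": {
            "get": {"parameters": [{"name": "userId", "in": "path", "type": "integer"}]},
            "delete": {"parameters": [{"name": "userId", "in": "path", "type": "integer"}]},
        },
        "/users/{userId}/orders": {
            "get": {"parameters": [
                {"name": "userId", "in": "path", "type": "integer"},
                {"name": "status", "in": "query", "type": "string"},
            ]},
        },
    }
}

for (name, type_), count in unique_parameters(spec).items():
    print(f"{name} ({type_}): used in {count} operation(s)")
```

With this sample, a tester would supply two values instead of four.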

To get these into Burp Suite or any other proxy, just configure your browser to use that proxy as you normally would when testing any other web application. Currently, there is no option for authorization in the interface (there will be in the future). For now, this can be handled by adding authorization headers through Burp Suite match and replace rules as needed.
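For readers who prefer to see the mechanics outside the browser, the sketch below approximates the same flow in a script: it fills a path template and query string from test values, optionally attaches an authorization header, and can route the request through a local proxy. This is not how Swagger-EZ works internally. The `build_request` and `send_via_proxy` helpers, the sample `spec`, the bearer token, and the proxy address `http://127.0.0.1:8080` are all assumptions for illustration; HTTPS targets would additionally need the proxy's CA certificate to be trusted.

```python
from urllib.parse import quote, urlencode
from urllib.request import ProxyHandler, Request, build_opener


def build_request(spec, method, path, values, auth_header=None):
    """Build a urllib Request for one operation, filling path and query parameters."""
    operation = spec["paths"][path][method.lower()]
    url_path = path
    query = {}
    for param in operation.get("parameters", []):
        name = param.get("name")
        if name not in values:
            continue
        if param.get("in") == "path":
            url_path = url_path.replace("{" + name + "}", quote(str(values[name]), safe=""))
        elif param.get("in") == "query":
            query[name] = values[name]
    scheme = (spec.get("schemes") or ["https"])[0]
    url = scheme + "://" + spec["host"] + spec.get("basePath", "").rstrip("/") + url_path
    if query:
        url += "?" + urlencode(query)
    headers = {"Authorization": auth_header} if auth_header else {}
    return Request(url, headers=headers, method=method.upper())


def send_via_proxy(request, proxy_url="http://127.0.0.1:8080"):
    """Send the request through an intercepting proxy (address is an assumption)."""
    opener = build_opener(ProxyHandler({"http": proxy_url, "https": proxy_url}))
    return opener.open(request, timeout=10)


# Hypothetical definition and test data.
spec = {
    "host": "api.example.com",
    "basePath": "/v1",
    "paths": {
        "/users/{userId}/orders": {
            "get": {"parameters": [
                {"name": "userId", "in": "path", "type": "integer"},
                {"name": "status", "in": "query", "type": "string"},
            ]},
        },
    },
}

request = build_request(
    spec, "get", "/users/{userId}/orders",
    {"userId": 42, "status": "open"},
    auth_header="Bearer TEST_TOKEN",
)
print(request.get_method(), request.full_url)
# To actually send it through the proxy: send_via_proxy(request)
```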

Problems

The same-origin policy can be a pain at times when using API tools that run in the browser, like Swagger-UI or Swagger-EZ. If the server you are making requests to does not return the proper Cross-Origin Resource Sharing (CORS) headers, the browser will not let the tool read the responses from the API.

You will see a message like this in the browser’s console:

It may even fail to retrieve the Swagger JSON altogether from that server. The tool handles this in a couple of different ways. If you cannot retrieve the Swagger JSON by URL because of CORS, you can just copy and paste the Swagger JSON blob directly into the input field and it will be parsed just the same.

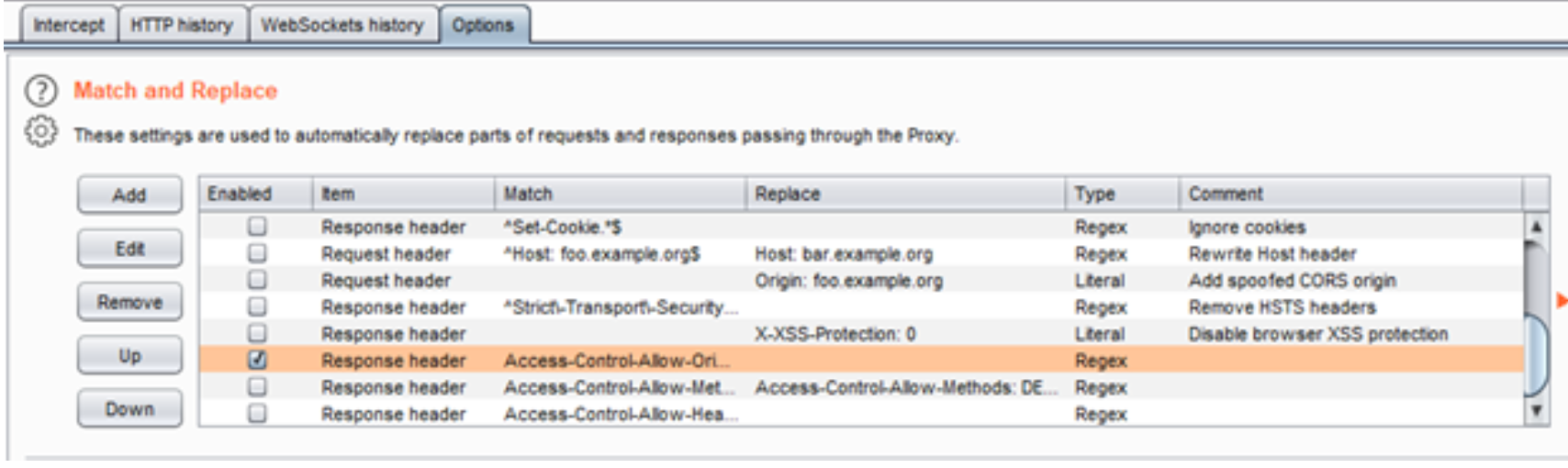

If you are receiving errors because CORS is blocking the responses from the actual API requests, you can just inject wide-open CORS headers through Burp Suite. This can be done by creating a “match and replace” rule for the response header in the proxy options section of Burp Suite.

Some of the useful and common rules needed to do this are displayed on the Swagger-EZ page.

These will get around most instances but may need slight tweaking on a case-by-case basis. The best idea is to keep your browser’s console (F12) open while using these in-browser tools so you can watch for any errors you may be encountering.
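To make the effect of such a rule concrete, here is a small sketch of what injecting wide-open CORS headers does to a response. It is written as a plain function over a header dictionary rather than as Burp Suite configuration, and the exact header values are assumptions; the rules shown on the Swagger-EZ page may differ. Note that the `*` wildcard is not honored by browsers for requests that include credentials, which is one reason the rules can need tweaking.

```python
# Permissive CORS response headers (values are assumptions for this sketch).
WIDE_OPEN_CORS = {
    "Access-Control-Allow-Origin": "*",
    "Access-Control-Allow-Methods": "GET, POST, PUT, PATCH, DELETE, OPTIONS",
    "Access-Control-Allow-Headers": "*",
}

# Header names are case-insensitive, so compare in lowercase.
_CORS_NAMES = {name.lower() for name in WIDE_OPEN_CORS}


def inject_cors(response_headers):
    """Return a copy of the headers with any existing CORS headers replaced."""
    cleaned = {
        name: value
        for name, value in response_headers.items()
        if name.lower() not in _CORS_NAMES
    }
    cleaned.update(WIDE_OPEN_CORS)
    return cleaned


# Hypothetical API response that only allows a single origin.
original = {
    "Content-Type": "application/json",
    "access-control-allow-origin": "https://app.example.com",
}
for name, value in inject_cors(original).items():
    print(f"{name}: {value}")
```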

Bad Definitions

While Swagger files are great and can make things easier, sometimes they fail. If the Swagger file you are working with does not closely follow the OpenAPI specification, it can often break the tools built to work with it. Much of the time these issues can be debugged fairly easily and you can get the Swagger file to a working state. To do this, it is best to use the Swagger-editor. This tool shows the JSON or YAML file on the left, which you can edit in real time, and the Swagger-UI with any errors on the right.

https://editor.swagger.io/

This way you can check the errors and work through each one, debugging in real time. Often it just takes modifying a couple of parameters or strings in the definition file to clean up the errors and allow it to work.
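Some of the most common breakages can also be spotted with a quick script before opening the editor. The sketch below checks a parsed Swagger 2.0 definition for a few fields the specification requires: the top-level `swagger`, `info`, and `paths` fields, `title` and `version` inside `info`, paths that begin with a slash, and a `responses` object on each operation. The `basic_problems` helper is hypothetical and covers only a small fraction of what the Swagger-editor validates.

```python
# Keys of a path item that describe an operation in Swagger 2.0.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch"}


def basic_problems(spec):
    """Return a list of human-readable problems found in a Swagger 2.0 definition."""
    problems = []
    for field in ("swagger", "info", "paths"):
        if field not in spec:
            problems.append(f"missing top-level '{field}'")
    info = spec.get("info")
    if isinstance(info, dict):
        for field in ("title", "version"):
            if field not in info:
                problems.append(f"missing 'info.{field}'")
    paths = spec.get("paths")
    if isinstance(paths, dict):
        for path, item in paths.items():
            if not path.startswith("/"):
                problems.append(f"path '{path}' does not start with '/'")
            if not isinstance(item, dict):
                continue
            for method, operation in item.items():
                if method in HTTP_METHODS and "responses" not in operation:
                    problems.append(f"{method.upper()} {path} has no 'responses'")
    return problems


# Hypothetical broken definition with three problems.
broken = {
    "swagger": "2.0",
    "info": {"title": "Example API"},
    "paths": {"users": {"get": {}}},
}
for problem in basic_problems(broken):
    print(problem)
```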

Conclusion

Hopefully, some of the methods and tools discussed here will make API testing a little easier and more successful for you. The API tool can be found here if you want to clone it for local use. A version to try out is also hosted here. You are likely to get better results by cloning it and running it locally.