Background: Amazon Go as a Technology

Earlier this year, Amazon introduced Amazon Go, a brand-new kind of grocery store with automated checkout and no cashiers! These stores are poised to revolutionize the way people shop for groceries.

At a very high level, this is how the store works. First, a customer downloads the Amazon Go mobile app and signs in with their Amazon account, which has a credit card attached. Shoppers enter the store by scanning a barcode displayed in the app, which opens the turnstile-like gates. They then walk around and shop normally. Instead of stopping at a cash register, customers literally just walk out of the store with the items they picked up. Amazon detects which items they left with, and shortly after they leave, the customer's credit card is charged the correct amount.

Amazon Web Services Simple Storage Service (S3) provides buckets that store files and data for many different use cases. Amazon Go uses AWS S3, and that is where this vulnerability comes into play.

Old Bug, New Application: AWS S3 Bucket Permissions

A recent examination of multiple enterprise environments revealed that organizations using Amazon Web Services (AWS) have difficulty fully securing every edge of their environments. These edge security issues include publicly readable/writable AWS S3 buckets, exposed key pairs, unauthenticated databases, and more. This blog post details a misconfiguration found in the Amazon Go mobile application that allowed an authenticated user to upload arbitrary files to an Amazon Go S3 bucket.

Rhino Security Labs worked with Amazon Go to get this issue corrected. Still, this example demonstrates how common, and how critical, configuration issues are within the AWS cloud.

Amazon Go Attack Narrative

The Amazon Go store had only recently opened. Having previously found high-impact vulnerabilities in Amazon Key, our researchers were interested in testing Amazon Go and its connected systems.

We had previously tried, unsuccessfully, to intercept API requests from the mobile app with Burp Suite. So our research team headed to the physical Amazon Go store in Seattle, WA, equipped with a laptop, a Wi-Fi hotspot, and a phone. Our goal was to intercept the app's traffic with Burp while someone was inside the store actively shopping. We wanted to see whether requests fire as customers enter the store, pick up and set down items, move about the store, and eventually leave.

Taking Our Research Mobile

We ran a few tests with Burp Suite and waited for the results. We found a request that returned a JSON object containing the keys “accessKeyId”, “secretAccessKey”, “sessionToken”, “url”, and “timeout”. The keys and session token were AWS credentials, the URL was an AWS Simple Queue Service (SQS) URL, and the timeout was around 1.5 seconds. After some analysis, we found that the SQS URL format was https://sqs.[region].amazonaws.com/[aws account id (might be the same for all users)]/DeviceQueue_[customer id]_[mobile device id].
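The URL format above can be split into its components mechanically. Below is a minimal sketch of that parsing. The function name `parse_device_queue_url`, the `DeviceQueue` field labels, and the assumption that the customer id contains no underscore are ours, not taken from the app.

```python
import re
from typing import NamedTuple


class DeviceQueue(NamedTuple):
    """Fields recovered from a DeviceQueue SQS URL (field names are our own labels)."""
    region: str
    account_id: str
    customer_id: str
    device_id: str


# Observed format:
# https://sqs.[region].amazonaws.com/[aws account id]/DeviceQueue_[customer id]_[mobile device id]
# Assumption: the customer id has no underscore, so the first underscore after
# "DeviceQueue_" separates it from the device id.
QUEUE_URL_RE = re.compile(
    r"^https://sqs\.(?P<region>[a-z0-9-]+)\.amazonaws\.com/"
    r"(?P<account_id>\d+)/DeviceQueue_(?P<customer_id>[^_/]+)_(?P<device_id>[^/]+)$"
)


def parse_device_queue_url(url: str) -> DeviceQueue:
    """Split a DeviceQueue URL into its parts, raising ValueError if it does not match."""
    match = QUEUE_URL_RE.match(url)
    if match is None:
        raise ValueError(f"unrecognized queue URL: {url}")
    return DeviceQueue(**match.groupdict())
```

For example, a made-up URL such as `https://sqs.us-west-2.amazonaws.com/123456789012/DeviceQueue_CUST123_dev-456` yields region `us-west-2`, customer id `CUST123`, and device id `dev-456`.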

Next, we needed to test the access key, secret key, and session token against AWS to see whether they were valid credentials. Because of the short timeout, the credentials had already expired by the time we tried them manually (obviously). To get around this, we wrote a Python script that made the initial request for the credentials and then immediately made subsequent requests using the returned credentials.
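The "fetch, then use immediately" pattern can be sketched generically. This is not the original script. `use_before_expiry` and its two callables are hypothetical placeholders: one would wrap the app's credential request, the other the follow-up AWS call. The elapsed time is measured from before the fetch, which is a conservative stand-in for the server-side expiry.

```python
import time
from typing import Callable, Dict, TypeVar

T = TypeVar("T")


def use_before_expiry(
    fetch_credentials: Callable[[], Dict[str, str]],
    use_credentials: Callable[[Dict[str, str]], T],
    timeout_seconds: float,
) -> T:
    """Fetch short-lived credentials and use them right away, failing if too slow.

    Both callables are hypothetical placeholders for the credential request
    and the follow-up AWS call.
    """
    started = time.monotonic()
    creds = fetch_credentials()
    # If the fetch alone consumed the validity window, the follow-up call is pointless.
    if time.monotonic() - started >= timeout_seconds:
        raise TimeoutError("credentials likely expired before they could be used")
    return use_credentials(creds)
```

Keeping both steps in one process avoids the manual copy-and-paste delay that made the credentials expire in the first place.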

As one might expect from the SQS URL returned with the keys, both polling and deleting messages on that specific queue worked. We tried many other general permissions but were not able to access anything else.
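Polling and deleting with the temporary credentials could look roughly like the sketch below, using the boto3 SQS client's `receive_message` and `delete_message` calls. It assumes the credential keys observed in the response (`accessKeyId`, `secretAccessKey`, `sessionToken`). The region is a parameter because it is not given here, and the helper names are our own. The original research does not describe what the queue messages contained.

```python
from typing import Any, Dict, List


def make_sqs_client(creds: Dict[str, str], region: str) -> Any:
    """Build an SQS client from the temporary credentials (requires boto3)."""
    import boto3  # imported lazily so poll_and_delete can be used with any client object

    return boto3.client(
        "sqs",
        aws_access_key_id=creds["accessKeyId"],
        aws_secret_access_key=creds["secretAccessKey"],
        aws_session_token=creds["sessionToken"],
        region_name=region,
    )


def poll_and_delete(sqs: Any, queue_url: str) -> List[str]:
    """Receive up to 10 messages from the queue, delete each one, and return the bodies."""
    response = sqs.receive_message(QueueUrl=queue_url, MaxNumberOfMessages=10)
    bodies = []
    for message in response.get("Messages", []):
        bodies.append(message["Body"])
        # Deletion needs the receipt handle returned by this particular receive call.
        sqs.delete_message(QueueUrl=queue_url, ReceiptHandle=message["ReceiptHandle"])
    return bodies
```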

The request also carries the header “X-Amz-Target: com.amazon.ihmfence.coral.IhmFenceService.getTransientQueue”. As we determined later, the value of that header refers to code written in the Java programming language, which is what Android applications are written in.
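The header value is dot-separated, with what looks like a service name followed by an operation name. A small sketch of splitting it is shown below. `split_amz_target` is a hypothetical helper, and it assumes the operation is always the last component with the service immediately before it.

```python
from typing import Tuple


def split_amz_target(target: str) -> Tuple[str, str, str]:
    """Split an X-Amz-Target value into (namespace, service, operation).

    Assumes the operation name is the last dot-separated component and the
    service name immediately precedes it.
    """
    namespace, service, operation = target.rsplit(".", 2)
    return namespace, service, operation
```

Applied to the observed header, this gives `IhmFenceService` as the service and `getTransientQueue` as the operation.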

Static Analysis Phase

It was time to perform static analysis on the app to see what that “X-Amz-Target” header was all about. We transferred the app to one of our computers and decompiled the .apk file to Java using a tool called JADX (https://github.com/skylot/jadx). This let us comb through the source in a more human-readable format.

Before diving in too deep, we searched for the name that the “X-Amz-Target” header referenced, “getTransientQueue”. We found “GetTransientQueueInput” and “GetTransientQueueOutput”, located under “com -> amazon -> ihm -> fence”. There were also many other classes in a similar “GetXYZ” format that all seemed to belong to the “FenceClient” class, which was in the same location.

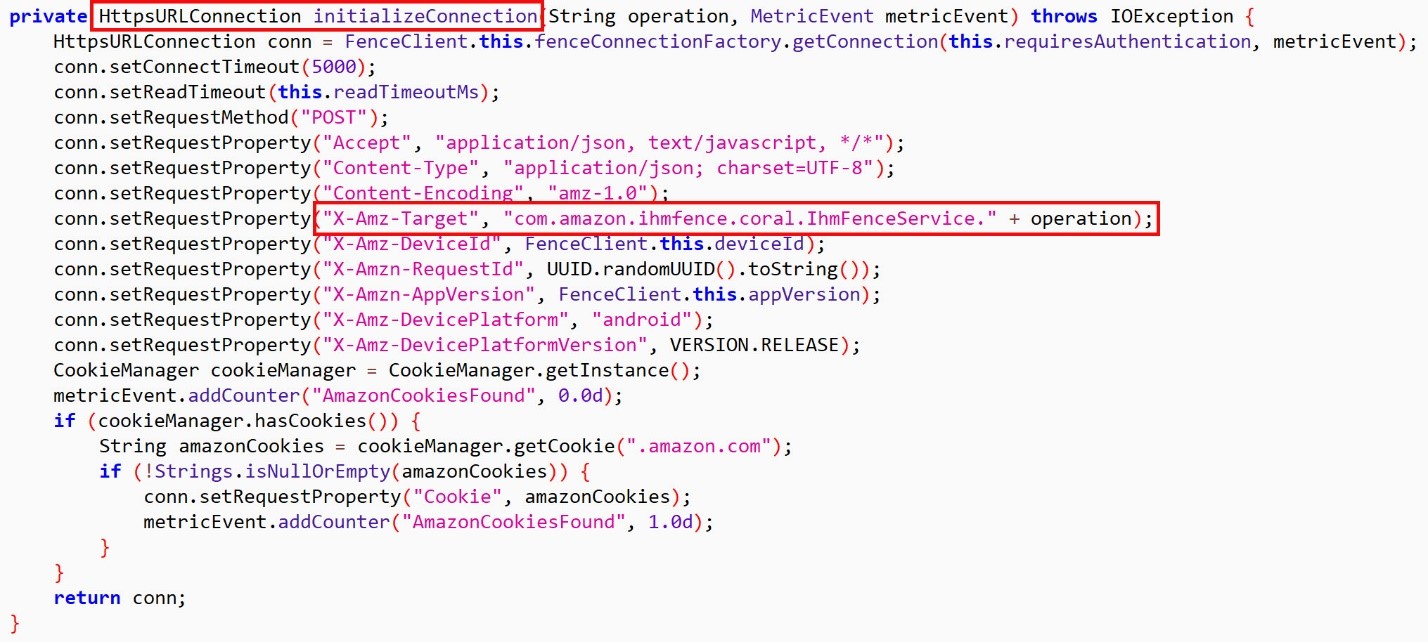
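The search described above can be reproduced over a JADX output directory (for example, one produced with `jadx -d out app.apk`). The sketch below is a plain case-insensitive text search, so `getTransientQueue` also matches `GetTransientQueueInput`. The function name and the `.java` suffix filter are our own assumptions.

```python
import os
from typing import List


def find_in_sources(root: str, needle: str, suffix: str = ".java") -> List[str]:
    """Return paths (relative to root) of decompiled source files containing needle.

    Matching is case-insensitive, so "getTransientQueue" also finds
    GetTransientQueueInput and GetTransientQueueOutput.
    """
    needle = needle.lower()
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if not name.endswith(suffix):
                continue
            path = os.path.join(dirpath, name)
            # Decompiled output can contain odd bytes; replace rather than crash.
            with open(path, encoding="utf-8", errors="replace") as handle:
                if needle in handle.read().lower():
                    hits.append(os.path.relpath(path, root))
    return sorted(hits)
```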

Browsing through the “FenceClient” class, we found quite a bit of information. First, we found the function that handles the HTTP requests we had been intercepting, which references the “X-Amz-Target” header from earlier.

The same class contained references to all the different values that could take the place of the “operation” variable in the picture. One of those, “getUploadCredentialsV2”, sounded very promising.

Next, we used Burp Suite to repeat the same request, replacing the “X-Amz-Target” header value with “com.amazon.ihmfence.coral.IhmFenceService.getUploadCredentialsV2”. Again, it responded with an access key, secret key, session token, and timeout value, though the key names were slightly different and there was no SQS URL.
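The header swap amounts to replacing only the final component of `X-Amz-Target` while leaving every other captured header unchanged. Here is a sketch, with `with_operation` as a hypothetical helper name.

```python
from typing import Dict


def with_operation(headers: Dict[str, str], operation: str) -> Dict[str, str]:
    """Return a copy of the request headers with the X-Amz-Target operation replaced.

    Only the final dot-separated component changes, e.g.
    ...IhmFenceService.getTransientQueue -> ...IhmFenceService.<operation>.
    """
    prefix, _old_operation = headers["X-Amz-Target"].rsplit(".", 1)
    updated = dict(headers)  # leave the captured headers untouched
    updated["X-Amz-Target"] = f"{prefix}.{operation}"
    return updated
```

Returning a copy keeps the original captured request available for comparison when trying several operation names.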

We returned to the Python script and changed it to call “getUploadCredentialsV2”, so that we could test the permissions associated with those keys.

Before testing random permissions with those keys, we decided to snoop around the source code some more to find out what this function is used for. A quick search for where it was imported and used led us to the class “LoggingUploadService”.

Okay, so the app uses those AWS keys to upload logs. One function in that class, “onCreate”, assigned the value “ihm-device-logs-prod” to the variable “this.s3BucketName”. We checked the public permissions on this S3 bucket, but it was locked down.
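One way to check public access is to send an unsigned (anonymous) request. The sketch below does this with botocore's `UNSIGNED` signature setting and tries a single `list_objects_v2` call. It is only one of several possible public-access checks. The helper names are ours, and the default region `us-west-2` is taken from the script further down. Any failure, including network errors, is treated as "not listable".

```python
from typing import Any


def make_anonymous_s3_client(region: str = "us-west-2") -> Any:
    """Build an S3 client that sends unsigned (anonymous) requests (requires boto3)."""
    import boto3
    from botocore import UNSIGNED
    from botocore.config import Config

    return boto3.client("s3", region_name=region, config=Config(signature_version=UNSIGNED))


def is_publicly_listable(s3: Any, bucket: str) -> bool:
    """Return True if an anonymous ListObjectsV2 call on the bucket succeeds."""
    try:
        s3.list_objects_v2(Bucket=bucket, MaxKeys=1)
    except Exception:  # AccessDenied (botocore ClientError), network errors, etc.
        return False
    return True
```

A locked-down bucket such as the one described here would return `False` from `is_publicly_listable(make_anonymous_s3_client(), "ihm-device-logs-prod")`.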

Back in the Python script, we added code that used the AWS keys to test S3 permissions on that bucket. We tried many different S3 permissions with the keys before attempting any uploads, but none succeeded. We then created a file “test.txt” containing the word “test” and changed the Python script to upload it to the S3 bucket… and it worked! The final version of the script below is also available on GitHub: https://github.com/RhinoSecurityLabs/Security-Research/blob/master/exploits/Amazon%20Go/s3-arbitrary-file-upload.py

```python
#!/usr/bin/env python

import boto3
import requests

# Make the request to getUploadCredentialsV2, which returns a temporary AWS access key, secret key, and session token
response = requests.post(
    'https://mccs.amazon.com/ihmfence',
    headers={
        'Accept': 'application/json',
        'x-amz-access-token': 'my-x-amz-access-token',
        'Content-Encoding': 'amz-1.0',
        'X-Amz-DevicePlatform': 'ios',
        'X-Amz-AppBuild': '4000022',
        'Accept-Language': 'en-us',
        'X-Amz-DeviceId': 'my-device-id',
        'Accept-Encoding': 'gzip, deflate',
        'Content-Type': 'application/json',
        'User-Agent': 'Amazon Go/4000022 CFNetwork/808.0.2 Darwin/16.0.0',
        'Connection': 'close',
        'X-Amz-DevicePlatformVersion': '10.0.2',
        'X-Amz-Target': 'com.amazon.ihmfence.coral.IhmFenceService.getUploadCredentialsV2',
        'X-Amzn-AppVersion': '1.0.0'
    },
    cookies={
        'ubid-tacbus': 'my-ubid-tacbus',
        'session-token': 'my-session-token',
        'at-tacbus': 'my-at-tacbus',
        'session-id': 'my-session-id',
        'session-id-time': 'some-time'
    },
    # Send an empty JSON object as the body
    data='{}'
)
# Stop early if the credential request was rejected
response.raise_for_status()

# Store the values returned in the response
# (this operation uses accessKey/secretKey rather than accessKeyId/secretAccessKey)
obj = response.json()
access_key = obj['accessKey']
secret_key = obj['secretKey']
session_token = obj['sessionToken']

# Create an S3 boto3 resource from the temporary credentials
s3 = boto3.resource(
    's3',
    aws_access_key_id=access_key,
    aws_secret_access_key=secret_key,
    aws_session_token=session_token,
    region_name='us-west-2'
)

# Upload my local ./test.txt file to ihm-device-logs-prod with the name test.txt
# (upload_file returns None and raises an exception if the upload fails)
s3.meta.client.upload_file('./test.txt', 'ihm-device-logs-prod', 'test.txt')

# Reaching this line means the upload succeeded
print('Uploaded test.txt to ihm-device-logs-prod')
```

We were now able to upload arbitrary files to Amazon Go’s logging S3 bucket. Once a file was uploaded, though, we couldn’t perform any other actions on it; it was uploaded and out of our hands.
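Confirming that an uploaded object is "out of our hands" means trying the obvious follow-up actions and recording each result. Below is a sketch that does this against a boto3 S3 client built from the upload credentials. The function name and the choice of actions are our own. The delete attempt is placed last so the read-only checks run first.

```python
from typing import Any, Callable, Dict


def probe_object_actions(s3: Any, bucket: str, key: str) -> Dict[str, str]:
    """Try several S3 actions on one object and record which ones are allowed.

    s3 is expected to be a boto3 S3 client built from the upload credentials.
    """
    attempts: Dict[str, Callable[[], Any]] = {
        "GetObject": lambda: s3.get_object(Bucket=bucket, Key=key),
        "GetObjectAcl": lambda: s3.get_object_acl(Bucket=bucket, Key=key),
        "HeadObject": lambda: s3.head_object(Bucket=bucket, Key=key),
        "DeleteObject": lambda: s3.delete_object(Bucket=bucket, Key=key),
    }
    results = {}
    for action, call in attempts.items():
        try:
            call()
            results[action] = "allowed"
        except Exception as exc:
            # botocore's ClientError exposes the service error code via exc.response.
            code = getattr(exc, "response", {}).get("Error", {}).get("Code", type(exc).__name__)
            results[action] = f"denied ({code})"
    return results
```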

At this point, we wanted to know what the files actually being uploaded looked like. We started by searching the filesystem of a rooted Android device for log files but found nothing. We assumed the log files were deleted locally after being uploaded to S3. So we put the phone into airplane mode and opened, closed, and interacted with the app to generate some logs, hoping to view them before they could be uploaded and deleted.

We eventually found the logs, named in the format “ERRORDIALOG_Month_DD_YYYY_HH:MM:SS_log.gz”. Each .gz file contained a single file with the same name, minus the .gz extension. When we opened it in a text editor, it contained many errors from attempted actions that failed for lack of an internet connection. The last entry in the file came from the LogUploadManager function, at which point the next log file would be created. The log format was simple and could easily be modified before upload, potentially enabling another attack.
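Recovering the timestamp from a log name and reading the compressed contents takes only the standard library. In the sketch below, the format string assumes "Month" is the full English month name; `%b` would be needed if it is abbreviated. The helper names are hypothetical.

```python
import gzip
from datetime import datetime
from typing import List

# Assumed layout of the observed name ERRORDIALOG_Month_DD_YYYY_HH:MM:SS_log.gz,
# with "Month" taken to be the full English month name (use %b if abbreviated).
LOG_NAME_FORMAT = "ERRORDIALOG_%B_%d_%Y_%H:%M:%S_log.gz"


def log_timestamp(filename: str) -> datetime:
    """Recover the timestamp embedded in a log file's name."""
    return datetime.strptime(filename, LOG_NAME_FORMAT)


def read_log_lines(path: str) -> List[str]:
    """Decompress a .gz log and return its text as a list of lines."""
    with gzip.open(path, "rt", encoding="utf-8", errors="replace") as handle:
        return handle.read().splitlines()
```

Because the decompressed log is plain text, the same `gzip` module could also write a modified copy. That is why the simple format matters for the tampering concern raised above.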

Here you can see a snippet from one of the log files, showing the app trying to connect to the internet.

Vulnerability Impact

Uploading arbitrary files to a private S3 bucket allows an attacker to fill the bucket with garbage files that take up a huge amount of space and cost the company money. This may be less concerning to Amazon, the parent company of AWS itself, but the impact on a small or mid-sized organization could be far more damaging.

Other attack vectors exist here as well. For example, an attacker could tamper with logs or other files that are already being uploaded, which could result in malicious data being executed if those files are processed downstream.

Disclosure Timeline

02/25/2018 – Rhino Security Labs notifies Amazon Go of vulnerability

02/26/2018 – Amazon Go replies with a reference number for the disclosure communication

03/04/2018 – Rhino Security Labs contacts Amazon Go to request status

03/05/2018 – Amazon Go replies that the issue has been fixed

03/27/2018 – Full Disclosure

Conclusion

AWS is a complex cloud environment where proper configuration is difficult for even the most experienced users. As you create your applications and deployments, it is essential to validate your configurations through penetration testing of your AWS environment. Taking these steps reduces the likelihood of a configuration error and helps ensure that your systems are secure.